Abstract

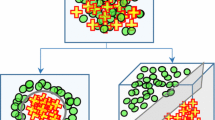

In this paper, a dual online subspace-based learning method called dual-generalized discriminative common vectors (Dual-GDCV) is presented. The method extends incremental GDCV by simultaneously exploiting incremental and decremental learning for supervised feature extraction and classification. Our methodology updates the feature representation space without recalculating the full projection or accessing previously processed training data. It allows information to be both added to and removed from a knowledge base efficiently, while retaining the previously acquired knowledge. The proposed method is supported by a theoretical proof and empirically validated on six standard face recognition and classification datasets under two scenarios: (1) removing and adding samples of existing classes, and (2) removing classes from and adding new classes to a classification problem. Results show a considerable computational gain without compromising model accuracy, compared with both batch methodologies and other state-of-the-art adaptive methods.
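To make concrete what an adaptive method avoids recomputing, here is a minimal batch sketch of a DCV/GDCV-style projection. It is not the authors' implementation. It assumes that the approximate null space of the within-class scatter matrix \(S_w\) is spanned by the eigenvectors whose eigenvalues fall below a relative threshold `tol`, and that a sample is assigned to the class with the nearest common vector. The function names `within_class_scatter`, `gdcv_like_fit`, `predict` and the parameter `tol` are hypothetical.

```python
import numpy as np


def within_class_scatter(X, y):
    """S_w = sum_c sum_{x in class c} (x - mu_c)(x - mu_c)^T; rows of X are samples."""
    d = X.shape[1]
    Sw = np.zeros((d, d))
    for c in np.unique(y):
        D = X[y == c] - X[y == c].mean(axis=0)   # centre each class on its own mean
        Sw += D.T @ D
    return Sw


def gdcv_like_fit(X, y, tol=1e-8):
    """Hypothetical batch fit: approximate null space of S_w plus one common vector per class."""
    evals, evecs = np.linalg.eigh(within_class_scatter(X, y))   # ascending eigenvalues
    # Assumption: keep eigenvectors whose eigenvalue is below tol times the largest one.
    W = evecs[:, evals <= tol * evals.max()]
    classes = np.unique(y)
    # Within this subspace every sample of a class projects (up to round-off)
    # onto the same point: the projection of its class mean.
    common = np.stack([X[y == c].mean(axis=0) @ W for c in classes])
    return W, classes, common


def predict(X, W, classes, common):
    """Assign each row of X to the class whose common vector is nearest after projection."""
    Z = X @ W
    dists = ((Z[:, None, :] - common[None, :, :]) ** 2).sum(axis=2)
    return classes[dists.argmin(axis=1)]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(15, 50))        # undersampled: 15 samples in 50 dimensions
    y = np.repeat([0, 1, 2], 5)
    W, classes, common = gdcv_like_fit(X, y)
    print(W.shape, predict(X, W, classes, common))
```

Any change to the training set changes \(S_w\) and therefore the eigendecomposition above; avoiding that full recomputation when samples or classes are added or removed is the purpose of the incremental and decremental updates described in the abstract.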

Notes

For more details, see the “Appendix”.

Acknowledgements

This work was supported by the project TIN2014-52072-P of the Spanish Ministry of Economy, Industry and Competitiveness with FEDER funds and the CERCA Programme/Generalitat de Catalunya.

Appendix

Decomposition of \(S_w^{\widetilde{X}}\)

The within-class scatter matrix of each training set is defined as

such that

where

From the above expressions,

with

If the classes in \(I\) are different from the classes in \(X\),
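Decompositions of this kind rest on a standard identity for scatter matrices, sketched below under the assumption that each class is summarized by its sample count, mean and scatter matrix (this is the textbook identity, not necessarily the paper's notation). For two disjoint sets of samples from the same class, with counts \(n_a, n_b\) and means \(\mu_a, \mu_b\), the scatter of their union is \(S_a + S_b + \frac{n_a n_b}{n_a + n_b}(\mu_a - \mu_b)(\mu_a - \mu_b)^T\); rearranging the same identity gives the decremental case. When the two sets share no classes, no cross term appears and the within-class scatter matrices simply add. The helper names `class_stats`, `add_stats`, `remove_stats` and `total_within_scatter` are hypothetical.

```python
import numpy as np


def class_stats(X, y):
    """Per-class sufficient statistics {label: (count, mean, scatter)}; rows of X are samples."""
    stats = {}
    for c in np.unique(y):
        Xc = X[y == c]
        mu = Xc.mean(axis=0)
        D = Xc - mu
        stats[c] = (len(Xc), mu, D.T @ D)
    return stats


def add_stats(a, b):
    """Incremental step: statistics of the union of two disjoint sample sets."""
    out = dict(a)
    for c, (nb, mb, Sb) in b.items():
        if c not in out:                     # new class: carried over, no cross term
            out[c] = (nb, mb, Sb)
            continue
        na, ma, Sa = out[c]
        n = na + nb
        d = ma - mb
        out[c] = (n, (na * ma + nb * mb) / n, Sa + Sb + (na * nb / n) * np.outer(d, d))
    return out


def remove_stats(full, r):
    """Decremental step: statistics after removing the samples summarized by r."""
    out = dict(full)
    for c, (nr, mr, Sr) in r.items():
        n, m, S = out[c]
        na = n - nr
        if na == 0:                          # the whole class is removed
            del out[c]
            continue
        ma = (n * m - nr * mr) / na          # mean of the remaining samples
        d = ma - mr
        out[c] = (na, ma, S - Sr - (na * nr / n) * np.outer(d, d))
    return out


def total_within_scatter(stats):
    """S_w as the sum of the per-class scatter matrices."""
    return sum(S for (_, _, S) in stats.values())


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.normal(size=(40, 6))
    y = np.arange(40) % 4
    full = class_stats(X, y)
    merged = add_stats(class_stats(X[:25], y[:25]), class_stats(X[25:], y[25:]))
    print(np.allclose(total_within_scatter(merged), total_within_scatter(full)))
```

For clarity the sketch stores a full \(d \times d\) matrix per class; it illustrates the identity rather than a memory-efficient subspace update.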

Cite this article

Diaz-Chito, K., Martínez del Rincón, J., Rusiñol, M. et al. Feature Extraction by Using Dual-Generalized Discriminative Common Vectors. J Math Imaging Vis 61, 331–351 (2019). https://doi.org/10.1007/s10851-018-0837-6
