Abstract

Assessing or predicting seismic damage in buildings is an essential and challenging component of seismic risk studies. Machine learning methods offer new perspectives for damage characterization, taking advantage of available data on the characteristics of built environments. In this study, we aim (1) to characterize seismic damage using a classification model trained and tested on damage survey data from earthquakes in Nepal, Haiti, Serbia and Italy and (2) to test how well a model trained on a given region (host) can predict damage in another region (target). The strategy adopted considers only simple data characterizing the building (number of stories and building age), seismic ground motion (macroseismic intensity) and a traffic-light-based damage classification model (green, yellow, red categories). The study confirms that the extreme gradient boosting classification model (XGBC) with oversampling predicts damage with 60% accuracy. However, the quality of the survey is a key issue for model performance. Furthermore, the host-to-target test suggests that the model’s applicability may be limited to regions with similar contextual environments (e.g., socio-economic conditions). Our results show that a model from one region can only be applied to another region under certain conditions. We expect our model to serve as a starting point for further analysis in host-to-target region adjustment and confirm the need for additional post-earthquake surveys in other regions with different tectonic, urban fabric and socio-economic contexts.

1 Introduction

The primary goal of seismic risk studies is to protect people and preserve their livelihoods by minimizing earthquake damage. A key component of the process is a comprehensive seismic damage assessment. Building damage modelling involves a definition of the hazard affecting the target region (i.e., expected frequency and intensity of ground shaking), a definition of exposure (classification of the buildings in the region of interest), and a definition of the vulnerability of the assets exposed to hazards (i.e., likelihood of damage or loss) (Silva et al. 2022). However, seismic damage estimation using conventional methods is challenging on a regional scale due to the lack of information required to characterize the building typologies and associated vulnerability functions. Such information is often sparse, incomplete, or available at low resolution, and building-by-building surveys to collect such details are impractical due to the time and resources involved (Riedel et al. 2015).

In this context, the use of machine learning methods for damage assessment, as initiated by Riedel et al. (2015), offers a change of paradigm by enabling cost-effective damage assessment relying on readily available data, such as those provided in national censuses. Machine learning methods involve mapping building features to damage levels via supervised learning algorithms to define a predictive model, which can then evaluate potential damage in other portfolios with similar features and for a given seismic ground motion. Furthermore, such methods offer superior computational efficiency, easy handling of complex problems, and the incorporation of uncertainties (Salehi and Burgueño 2018; Hegde and Rokseth 2020; Xie et al. 2020; Stojadinović et al. 2021). Numerous recent studies (e.g., Mangalathu et al. 2020; Roeslin et al. 2020; Stojadinović et al. 2021; Harirchian et al. 2021; Ghimire et al. 2022, 2023) have evaluated the effectiveness of damage prediction of different machine learning models using a post-earthquake building damage dataset from a given region. These studies concluded that machine learning models using basic building features such as age, number of stories, floor area, height, and ground-motion related parameters (e.g., macro-seismic intensity, peak ground acceleration, spectral acceleration) can provide reasonable damage estimates, thus facilitating cost-effective damage assessment on a regional scale, without the need to define commonly used regional vulnerability or fragility functions.

However, many cities located in moderate-to-high seismic risk regions with significant vulnerabilities struggle to develop regional vulnerability models based on machine learning methods, because the small number of recent earthquakes leaves them with insufficient damage data. In such situations, seismic damage estimation could be carried out by transferring damage prediction models trained in a host region with sufficient data to a target region (Roca et al. 2006; Guéguen et al. 2007). However, region-specific characteristics, such as regional materials, building design, progress in seismic regulation and hazard levels, can significantly influence damage assessment models and ultimately bias the host-to-target adjustment; this bias must be analyzed for different regions (Ghimire et al. 2023).

Access to exposure data and post-seismic damage observations for different hazard contexts and exposure models in different regions has improved in recent years (e.g., NPC 2015; Dolce et al. 2019; Stojadinović et al. 2021; MTPTC 2010). This increase in data can be used to address two issues: (a) How effective are machine learning models trained on aggregated datasets from earthquakes and regions, regardless of the exposure and hazard context, when it comes to damage assessment? (b) How accurately can machine learning models, trained on specific regions and earthquakes (host), predict seismic damage in other regions (target) with varying contextual attributes? This study aims to investigate these two issues using post-earthquake building damage surveys from the 2015 Nepal earthquake (NPC 2015), the 2010 Haiti earthquake (MTPTC 2010), the 2010 Serbia earthquake (Stojadinović et al. 2021) and the database of observed damage (DaDO) from several Italian earthquakes (Dolce et al. 2019). First, damage prediction efficacy is tested using a model trained on aggregated datasets at both building and portfolio levels for all the earthquakes considered. Then, host-to-target tests of machine learning models are analyzed considering several different datasets for training and testing.

This manuscript is structured as follows: Sect. 2 presents the datasets used for machine learning development, Sect. 3 describes the machine learning (ML) method used in this study, which has previously been tested and validated in peer-reviewed papers, Sect. 4 demonstrates the efficacy of the selected method and the limitations of the host-to-target adjustments under several conditions, and Sect. 5 contains a discussion and our conclusions.

2 Data

The data used in this study come from post-seismic surveys carried out after several major earthquakes: the Mw7.8 Nepal earthquake of 2015, the Mw7.0 Haiti earthquake of 2010, the Mw5.4 Serbia earthquake of 2010, and a series of Italian earthquakes about which information was provided in a national database of observed damage (DaDO, Dolce et al. 2019). A general description of these databases is given below, but details of their contents can be found in the references mentioned. A more detailed description of the Haiti earthquake information is nevertheless provided here, as no description of this database has yet been published.

The Nepal earthquake building damage portfolio (NBDP) concerns the Mw7.8 2015 earthquake, which damaged thousands of residential buildings, killing nearly 9,000 people and injuring more than 22,000 (NPC 2015). After the earthquake, the Nepalese authorities decided to lead an extensive post-earthquake survey in the eleven most severely affected regions around Kathmandu. The survey included a visual screening of building features and damage levels by experts, and the information was compiled to form the NBDP database used in this study (NPC 2015). The NBDP database contains information on 762,106 buildings, including details of the main structural features. Damage is classified into five damage grades (DG1 to DG5), in line with the EMS-98 damage classification (Grünthal 1998).

The Serbia earthquake building dataset (SBDP) was set up after the Mw5.4 Kraljevo earthquake in 2010 for the purpose of reconstruction planning and resilience. This earthquake caused 2 fatalities and almost 6,000 structures suffered damage, 75% of which were classified as suitable for immediate occupancy (Stojadinović et al. 2021). Several weeks after the earthquake, local and regional experts performed damage inspections and assessments, resulting in a documented report of the recovery process, including monitoring of the damage inspections. The final dataset, which also includes undamaged buildings, is published with open access (RELA 2023) and contains basic building features and the damage grade according to the EMS-98 damage classification (Stojadinović et al. 2021).

The database of observed damage in Italy (DaDO) is a collection of post-earthquake building damage surveys following several Italian earthquakes between 1976 and 2019, developed by the Eucentre Foundation for the Civil Protection Department (Dolce et al. 2019). This database includes information on building shape and design, the built environment and observed damage. A framework was applied by Dolce et al. (2019) to homogenize the information collected and to translate the damage information into the EMS-98 scale (Grünthal 1998). A more detailed description of the DaDO can be found in Dolce et al. (2019). In this study, we selected building damage data from seven earthquakes previously selected by Ghimire et al. (2023), as summarized in Table 1.

Finally, the Haiti earthquake building damage dataset (HBDP) corresponds to post-earthquake building damage survey data collected after the Mw7 earthquake in 2010 (MTPTC 2010). This earthquake caused over 300,000 casualties, left more than 1.3 million people homeless, and resulted in estimated losses of US$7–14 billion, exceeding Haiti’s gross domestic product (DesRoches et al. 2011). The government of Haiti conducted a massive post-earthquake damage survey with the help of more than 300 trained engineers, assisted by third-party structural engineers, and developed a database of observed damage (MTPTC 2010). The ATC-20 methodology (ATC 2005) adopted for damage classification groups information into seven discrete classes based on visual observation (none for no damage DG0, slight for 0–1% damage DG1, light for 1–10% damage DG2, moderate for 10–30% damage DG3, heavy for 30–60% damage DG4, major for 60–100% damage DG5 and destroyed for 100% damage DG6). Building features were collected at the same time: number of stories (i.e., total number of floors above the ground surface), age of building (i.e., time difference between the date of the earthquake and the date of building construction/renovation, grouped into four categories: 0–10 years, 11–25 years, 26–50 years, and > 50 years), floor plan (i.e., geometric shape defining the building plan as E-shape, H-shape, L-shape, O-shape, Rectangular-shape, T-shape, U-shape, or Other-shape), wall type (i.e., materials used for vertical structural elements and defined as block-masonry with reinforcement, block-masonry without reinforcement, brick-masonry, reinforced concrete, stone-masonry, wood-masonry, and others), structure type (i.e., material used for vertical structural elements and defined as reinforced concrete structures, load-bearing wall structures, steel sheet-metal structures, and wood sheet-metal structures), and floor type (i.e., materials used for horizontal structural elements and defined as reinforced concrete floor, concrete floor, and wooden floor). The location of each building is also indicated (latitude and longitude).

In this study, we seek to test host-to-target adjustment from one region (or earthquake) to another. We therefore selected the input (ground motion and building features) and target (damage) parameters homogeneously, to enable transposition from one region to another. Riedel et al. (2015) and, more recently, Ghimire et al. (2022, 2023) have shown the relevance of using only a few building parameters: number of stories and age of building. This building representation is incomplete, since it does not include explicit information about structural features, seismic design and/or soil condition, but it offers the advantages of (1) being evaluated deterministically without introducing bias between regions in the definition of the exposure model, (2) implicitly integrating some hidden regional structural information (e.g., tall buildings built after the year 2000 are probably made of concrete), and (3) according to tests performed by Ghimire et al. (2022, 2023), enabling damage assessment with > 60% accuracy. The number of stories is the total number of floors above the ground surface. To avoid bias related to some specific regional structural features, we only selected buildings with fewer than 10 stories, classified into three categories: 1–3, 4–6 and > 6 stories. The building age was calculated from the construction date to the date of the earthquake and put into four classes: 0–20, 21–40, 41–60 and > 60 years. No additional data-cleaning methods were implemented. The final NBDP/HBDP/SBDP databases contain 757,362/353,534/1,949 buildings respectively, and the DaDO:E1/E2/E3/E4/E5/E6/E7 databases contain 37,828/9,440/6,391/238/37,994/10,577/1,472 buildings with complete information on both structural features.
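The class boundaries described above can be sketched in a few lines of Python. This is only an illustration: the function names and the labels (NS1 to NS3, AG1 to AG4, borrowed from the figure caption) are choices made here, and treating 10 stories as the inclusive upper limit is an assumption, since the text states "fewer than 10" while the caption reads "7–10".

```python
def story_class(n_stories: int, max_stories: int = 10) -> str:
    """Return the story class (1-3, 4-6, >6) for a building.

    max_stories is an assumed inclusive cap; taller buildings are rejected.
    """
    if n_stories < 1 or n_stories > max_stories:
        raise ValueError(f"story count {n_stories} outside the selected range")
    if n_stories <= 3:
        return "NS1"
    if n_stories <= 6:
        return "NS2"
    return "NS3"


def age_class(construction_year: int, earthquake_year: int) -> str:
    """Return the age class (0-20, 21-40, 41-60, >60 years) at the time of the earthquake."""
    age = earthquake_year - construction_year
    if age < 0:
        raise ValueError("construction year is later than the earthquake year")
    if age <= 20:
        return "AG1"
    if age <= 40:
        return "AG2"
    if age <= 60:
        return "AG3"
    return "AG4"


# Hypothetical building: 2 stories, built in 1990, surveyed after a 2015 earthquake.
features = (story_class(2), age_class(1990, 2015))
```

Encoding both features as ordered categories keeps the exposure description identical across regions, which is the property the host-to-target test relies on.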

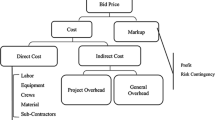

The original damage information from NBDP, DaDO, HBDP, and SBDP is classified into three damage grades according to the severity of the damage, consistent with the traffic light damage classification system commonly used in post-earthquake damage surveys: green for none-to-slight damage, yellow for moderate-to-heavy damage, and red for very-heavy damage to collapse. Except NBDP, all datasets have information on undamaged buildings, classified as green damage grade. Ghimire et al. (2022, 2023) showed a significant improvement in the accuracy of damage assessments when moving from a classification of five grades of damage (i.e., EMS-98) to three grades, interpreted as being due to the difficulty of distinguishing between intermediate grades (between DG3 and DG4, for example) and considering the undamaged buildings (Ghimire et al. 2023). Table 2 and Fig. 1 show the distribution of building features and damage grades across each dataset. The NBDP contains a higher percentage of buildings in the red class (60.51%), while the DaDO (69.60%), HBDP (42.17%), and SBDP (83.63%) have a higher proportion of buildings in the green class. Most buildings in the datasets are low-rise structures (1–3 stories), which represent 98.93% in NBDP, 85.81% in DaDO, 99.73% in HBDP, and 99.85% in SBDP. The predominant building age ranges are 0–20 years for NBDP (63.08%) and HBDP (83.83%), 41–60 years for DaDO (34.16%), and 21–40 years for SBDP (37.81%), which may reflect the urbanization history of these regions and, implicitly, some specific regional structural features. Finally, the aggregated dataset (All = NBDP + HBDP + SBDP + DaDO) includes 98.05% of low-rise buildings, 64.94% of buildings less than 20 years old, and 45.23% of buildings in the red damage class.
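As an illustration of the regrouping into traffic-light classes, the sketch below maps EMS-98 grades to the three colors and computes class shares for a portfolio. The exact grade boundaries (DG0–DG1 green, DG2–DG3 yellow, DG4–DG5 red) are an assumption inferred from the verbal descriptions above and the EMS-98 grade names; the mapping applied to the seven ATC-20 classes of the HBDP is not specified here and is therefore not attempted.

```python
from collections import Counter

# Assumed EMS-98 -> traffic-light mapping (not stated explicitly in the text).
EMS98_TO_TRAFFIC_LIGHT = {
    0: "green", 1: "green",    # none to slight
    2: "yellow", 3: "yellow",  # moderate to heavy
    4: "red", 5: "red",        # very heavy to collapse
}


def to_traffic_light(ems98_grade: int) -> str:
    """Convert one EMS-98 damage grade (0-5) to a traffic-light class."""
    if ems98_grade not in EMS98_TO_TRAFFIC_LIGHT:
        raise ValueError(f"unknown EMS-98 grade: {ems98_grade}")
    return EMS98_TO_TRAFFIC_LIGHT[ems98_grade]


def grade_shares(ems98_grades):
    """Return the percentage of buildings in each traffic-light class."""
    counts = Counter(to_traffic_light(g) for g in ems98_grades)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("empty portfolio")
    return {color: 100.0 * counts[color] / total for color in ("green", "yellow", "red")}


# Hypothetical portfolio of eight surveyed buildings.
shares = grade_shares([0, 1, 2, 3, 4, 5, 5, 5])
```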

Distribution of different features in the NBDP (grey bar), DaDO (orange bar), HBDP (yellow bar), and SBDP (purple bar) databases. The y-axis is the percentage distribution, and the x-axis shows (a) Damage grade (G: green; Y: yellow; and R: red), (b) Building age (AG1: 0–20 years; AG2: 21–40 years; AG3: 41–60 years; AG4: > 60 years), (c) Number of stories (NS1: 1–3 stories; NS2: 4–6 stories; and NS3: 7–10 stories), and (d) Macro-seismic intensity (MSI)

Furthermore, the ground motion of the mainshocks is integrated into the database as the macro-seismic intensity (MSI) published by the United States Geological Survey (USGS) ShakeMap (Wald et al. 2005). MSI accounts for spatially distributed ground motion, given in terms of modified Mercalli intensity, and is assigned to each building based on its geographic location. Other parameters characterizing seismic motion could have been selected (e.g., peak values, spectral response values), but ShakeMap macroseismic intensities are evaluated in the same manner regardless of the earthquake, which suits host-to-target testing, and, at the macroscopic level, they integrate site conditions that can modify the local hazard.

3 Method

In this study, the input features comprise the number of stories, building age, and MSI, while the target feature is the damage grade. The imbalanced nature of the data in the dataset considered requires the use of rebalancing associated with an efficient ML-based method. Following the recommendation of Ghimire et al. (2023), who compared the efficacy of several ML-based methods, the extreme gradient boosting classification (XGBC) method (Chen and Guestrin 2016) is used in this study, with the hyperparameters provided in Ghimire et al. (2023) (i.e., n_estimators = 1000; max_depth = 10; learning_rate = 0.01). The random oversampling method, which consists of randomly replicating data entries in each minority class until their number matches that of the majority class, is applied to address the imbalanced distribution of the data; Ghimire et al. (2023) confirmed it as the most relevant rebalancing method for this application.
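Random oversampling as described above can be sketched with the Python standard library alone. The snippet is a minimal illustration, not the implementation used in the study: the function name `random_oversample`, the `label_of` argument and the toy records are hypothetical, and sampling with replacement from a fixed seed is an assumption. The hyperparameter dictionary simply restates the values quoted in the text.

```python
import random
from collections import defaultdict

# Hyperparameters quoted in the text (Ghimire et al. 2023).
XGBC_PARAMS = {"n_estimators": 1000, "max_depth": 10, "learning_rate": 0.01}


def random_oversample(rows, label_of, seed=0):
    """Replicate randomly chosen minority-class rows until every class
    has as many rows as the majority class."""
    rows = list(rows)
    if not rows:
        return []
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for row in rows:
        by_class[label_of(row)].append(row)
    target = max(len(members) for members in by_class.values())
    balanced = []
    for members in by_class.values():
        balanced.extend(members)
        # Draw the missing rows with replacement from the same class.
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced


# Toy training set: (story class, age class, MSI, damage grade).
toy = (
    [("NS1", "AG1", 7, "green")] * 6
    + [("NS1", "AG2", 8, "yellow")] * 3
    + [("NS2", "AG3", 9, "red")] * 1
)
balanced = random_oversample(toy, label_of=lambda row: row[-1])
```

Oversampling is applied to the training set only; the test set keeps its original class distribution so that reported accuracies reflect the surveyed portfolio.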

The models are developed in Python using the scikit-learn package (Pedregosa et al. 2011). The damage prediction effectiveness of the XGBC model is first analyzed considering each dataset of specific-earthquake damage. The dataset is randomly partitioned into two subsets: a training set (60% of the dataset) and a testing set (40% of the dataset). The training set is used for model training, and the testing set (kept hidden during model training) is used to test the effectiveness of damage prediction. Model predictions are compared with the damage observed at both building and portfolio levels.

First, at the building level, model efficacy is assessed by the error distribution \({\varepsilon }_{d}\) calculated using Eq. (1), as follows:

\[{\varepsilon }_{d}\left(\%\right)=\frac{{n}_{e}}{N}\times 100 \qquad (1)\]

where \({n}_{e}\) is the total number of buildings at a given error level (difference between observed and predicted damage grades) and \(N\) is the total number of buildings in the portfolio. A 100% distribution of \({\varepsilon }_{d}\) centered on the zero-error value indicates the most efficient model. In this study, error is also represented by damage grade according to the traffic light system (green, yellow, red).

Second, at the portfolio level, the buildings are grouped according to damage grade, and damage prediction effectiveness is assessed by computing the mean absolute error (MAE) using Eq. (2), as follows:

\[MAE\left(\%\right)=\frac{1}{N}\sum_{DG}\left|{n}_{{DG}_{obs}}-{n}_{{DG}_{pred}}\right|\times 100 \qquad (2)\]

where \({n}_{{DG}_{obs}}\) and \({n}_{{DG}_{pred}}\) represent the total number of observed and predicted buildings in each damage grade (green, yellow, red), respectively, and \(N\) is the total number of buildings in the portfolio. An MAE value close to 0 represents the most efficient model. In this study, damage prediction is evaluated at the building level using Eq. 1 and at the portfolio level using Eq. 2.
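The two metrics can be illustrated with a short Python sketch based on the symbol definitions above. Several points are assumptions rather than statements of the original implementation: the error level is taken as observed minus predicted grade (so that positive values correspond to underprediction), the portfolio-level MAE is computed as the sum over the three grades of the absolute count differences, divided by the number of buildings and expressed in percent, and the intermediate grade is labeled yellow. The function names are hypothetical.

```python
from collections import Counter

GRADE_INDEX = {"green": 0, "yellow": 1, "red": 2}


def error_distribution(observed, predicted):
    """Building level (Eq. 1): percentage of buildings at each error level,
    where error = observed grade index - predicted grade index."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("observed and predicted must be non-empty and of equal length")
    n = len(observed)
    counts = Counter(GRADE_INDEX[o] - GRADE_INDEX[p] for o, p in zip(observed, predicted))
    return {level: 100.0 * counts.get(level, 0) / n for level in range(-2, 3)}


def portfolio_mae(observed, predicted):
    """Portfolio level (Eq. 2, assumed normalization): absolute differences
    between observed and predicted counts per grade, summed and divided by N."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("observed and predicted must be non-empty and of equal length")
    n = len(observed)
    obs, pred = Counter(observed), Counter(predicted)
    return 100.0 * sum(abs(obs[g] - pred[g]) for g in GRADE_INDEX) / n


# Hypothetical five-building portfolio.
observed = ["green", "green", "yellow", "red", "red"]
predicted = ["green", "yellow", "yellow", "red", "yellow"]
eps = error_distribution(observed, predicted)   # eps[0] is the building-level accuracy
mae = portfolio_mae(observed, predicted)
```

In this toy case three of five buildings are correctly classified (60% at error level 0), while the portfolio-level counts differ by one green, two yellow and one red building.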

The XGBC classification model is first trained on each specific-earthquake damage portfolio, then tested in the same region, randomly selecting 60% and 40% of each dataset for training and testing, respectively; this is the specific-earthquake model. In the second step, a model (named the aggregated-earthquake model) is trained on the building damage datasets from all the specific-earthquake portfolios. Again, XGBC is trained and tested on 60% and 40% of the data respectively. However, the training dataset is made up of the sum of the 60% sets of data randomly selected from each specific-earthquake dataset, and the remaining 40% sets are merged to form the test dataset. In this way, the distribution of features in each portfolio is considered to be respected.
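The construction of the aggregated training and test sets can be sketched as follows. This is a minimal standard-library illustration under stated assumptions: the helper names `split_60_40` and `aggregated_split` are hypothetical, the split point is rounded to the nearest integer, and a fixed seed is used only to make the example reproducible.

```python
import random


def split_60_40(records, train_fraction=0.6, seed=0):
    """Randomly split one portfolio into training and test subsets."""
    rng = random.Random(seed)
    shuffled = list(records)
    rng.shuffle(shuffled)
    cut = round(train_fraction * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


def aggregated_split(portfolios, train_fraction=0.6, seed=0):
    """Split every portfolio separately, then merge the training parts and
    the test parts, keeping the portfolio name attached to each record."""
    train, test = [], []
    for name in sorted(portfolios):
        part_train, part_test = split_60_40(portfolios[name], train_fraction, seed)
        train.extend((name, record) for record in part_train)
        test.extend((name, record) for record in part_test)
    return train, test


# Hypothetical portfolios with integer placeholders standing in for building records.
portfolios = {"A": list(range(10)), "B": list(range(100, 105))}
train, test = aggregated_split(portfolios)
```

Keeping the portfolio name with each record allows the merged test set to be evaluated both as a whole and per earthquake.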

Finally, several XGBC models are trained using 100% of the building damage portfolios from one region (host) and tested on 100% of another dataset (target). Given the significant differences in building design portfolios between the countries concerned, damage prediction is also analyzed with two socio-economic indexes: (1) the gross domestic product (GDP) per capita in US dollars used by the World Bank (https://data.worldbank.org/indicator/NY.GDP.PCAP.CD) to classify the level of economic development of nations and computed herein as the median value over the years before the earthquake (Fig. 2a); (2) the human development index (HDI) used by the United Nations Development Programme (https://hdr.undp.org/data-center/country-insights#/ranks) as a composite measure of human development ranging from 0 to 1, that includes life expectancy, education and standards of living, considered herein for the year before the earthquake (Fig. 2b).

4 Results

4.1 Specific-earthquake damage model

Figure 3 shows the effectiveness of the XGBC model considering the aforementioned specific-earthquake portfolios plus the specific DaDO:E5 earthquake, i.e. the 2009 L’Aquila earthquake with the largest number of building surveys. Damage prediction accuracy computed at the building level (Eq. 1) including all damage grades (Fig. 3a) indicates that the XGBC model correctly classified 67%, 46%, 66%, 57%, and 59% of buildings for NBDP, HBDP, SBDP, DaDO, and DaDO:E5, respectively. The accuracy scores for NBDP and SBDP are very similar to the accuracy scores reported by Riedel et al. (2015) (62%), Mangalathu et al. (2020) (66%), Roeslin et al. (2020) (67%) and Harirchian et al. (2021) (65%) in similar tasks using different machine learning models with varying input features. DaDO and DaDO:E5 score less than 60% because of the imbalanced data (Ghimire et al. 2023). Figure 3b shows the probability density function fitted to the building-level error distribution (Fig. 3a). The XGBC model trained on NBDP exhibits a positive value at peak (0.20), implying underprediction of damage. Conversely, models trained on HBDP, DaDO, DaDO:E5, and SBDP (with negative values at peaks of −0.08, −0.34, −0.37, −0.31, respectively) imply overprediction of damage. As also reported for DaDO by Ghimire et al. (2023), these results implicitly reflect the confusion caused by the simplification of the input features considered herein for the model.

Efficacy of the specific-earthquake damage model. (a) Error values (Eq. 1), (b) Probability distribution function, (c) Observed (obs) and predicted (pred) number of buildings in each damage grade (green, orange, and red), and (d) Mean error values (Eq. 2). In (a) and (b), the x-axis represents incremental error in damage grades (difference between observed and predicted, regardless of grade considered). The y-axis denotes (a) error values \({\varepsilon }_{d}\) in %, (b) probability density fitted to the error values, and (d) mean absolute error (MAE) values in percentage. In (c), the x-axis is the distribution of buildings in each damage grade (green, orange, red)

Furthermore, Fig. 3c shows the distribution of observed and predicted numbers of buildings by damage grade and Fig. 3d shows the corresponding MAE values (Eq. 2) at building portfolio level. The portfolio level model is more effective at aggregating buildings according to damage level than the building level model. For example, in the HBDP dataset with 46% accuracy in building level damage prediction (Fig. 3a), a low MAE value is observed (3.5%), indicating a similar distribution between observed and predicted damage grades, as also shown in Fig. 3c. For other portfolios, MAE values range between 7% and 15%, with a slight overprediction of the green grade in Fig. 3c, which might be attributed to the use of the random oversampling method to address class imbalance issues.

In conclusion, the accuracy scores remain more than satisfactory for the effort required to characterize the building portfolio (i.e., two basic building features and MSI for ground motion), given that the objective of the model is damage classification based on a traffic-light system. The effectiveness of the machine learning model at predicting damage at the building level is notably influenced by the datasets used for training and testing.

4.2 Aggregated-earthquake damage model

The distribution of the damage portfolio of the aggregated-earthquake dataset is imbalanced because of the amount of data in NBDP. Two XGBC models are therefore considered: XGBC1, which does not address the data imbalance, and XGBC2, which addresses the imbalanced distribution of data in the aggregated-earthquake training set. In XGBC2, the data imbalance is addressed using a resampling technique, i.e., randomly replicating data points from minority building damage portfolios (HBDP, DaDO and SBDP) to achieve the same number of data points as the majority portfolio (NBDP) in the aggregated-earthquake training dataset. The test is then performed on the 40% remaining data of the entire portfolio (referred to as ALL in Fig. 4) and for each specific earthquake.

Efficacy of the aggregated-earthquake damage model at building level represented by the error value \({\varepsilon }_{d}\) (Eq. 1) for the XGBC model trained on 60% of aggregated-earthquake data and tested on the remaining 40% of data in the aggregated-earthquake dataset (ALL) and specific-earthquake dataset. (a) XGBC1 not addressing and (b) XGBC2 addressing data distribution imbalance. The color scale is applied to the values in each cell. The x-value represents the difference between observed and predicted damage grades, regardless of the damage grade considered

Figure 4 shows the efficacy of the model at the building level, measured through error distribution (Eq. 1) for XGBC1 and XGBC2 tested on both aggregated- (ALL) and specific-earthquake data. XGBC1 (Fig. 4a) shows accuracy values equal to 60%, 65%, 77%, and between 61% and 81% for ALL, NBDP, SBDP, and specific DaDO datasets, respectively, i.e., almost the same accuracy scores as with the specific-earthquake model. Building-level accuracy remains lower (46%) for HBDP, which confirms that the Haiti building portfolio has regional specificities, compared with the other countries, that the model cannot predict. XGBC1 tends to underpredict damage levels, particularly for HBDP and DaDO (majority of x-values in +1: 11–37%), which reflects the major contribution of NBDP to the aggregated training dataset (see Table 1). XGBC2 slightly improves building-level damage prediction efficacy: e.g., accuracy values in 0 are 63% for ALL, 81% for SBDP, 69% for NBDP, and between 61% and 84% for DaDO specific-earthquake portfolios (Fig. 4b). Classification errors are mainly within one grade of the actual damage grade (see x-values of ±1 in Fig. 4a and b). At building level, merging datasets from several regions with different conceptual characteristics (i.e., different portfolios) does not significantly improve the damage model, regardless of whether imbalanced data is addressed or not.

However, the benefit of addressing imbalanced data distribution is clearly highlighted in the portfolio-level assessment (Fig. 5). Without rebalancing, XGBC1 (Fig. 5a) yields MAE values of 8.7% for ALL and 12% to 21% for specific-DaDO-earthquakes, reaching 26.2% for HBDP (Fig. 5b). For NBDP and SBDP, XGBC1 nevertheless yields lower MAE values of 2.1% and 4.1% respectively, reflecting a good match with actual observations from the field. In contrast, XGBC2 notably improves the classification of yellow and red damage grades (Fig. 5b), leading to lower MAE values: 3.2% for ALL, between 5% and 13% for specific-DaDO-earthquakes, and 11.4% for HBDP. The MAE value increases slightly (to 6.8%) for NBDP.

Efficacy of the aggregated-earthquake damage model at portfolio level. (a) Observed (obs) and predicted (pred) number of buildings in each damage grade (green, orange, and red) with (XGBC2) and without (XGBC1) addressing imbalanced data, and (b) Mean error values (Eq. 2) for models XGBC1 and XGBC2. In (a), the x-axis is the distribution of buildings in each damage grade (green, orange, red)

In conclusion, the aggregated-earthquake model, trained using data from several regions with diverse building portfolios and damage intensity distributions, provides results similar to those of the specific-earthquake model. At the portfolio level, accuracy is improved by addressing the imbalance issue and considering the whole dataset (ALL in Fig. 5b).

4.3 Host-to-target test

In this section, the XGBC models are trained using 100% of the portfolio from the earthquake-specific dataset (host) and tested on 100% of the portfolio from other earthquake-specific datasets (target). Figure 6 shows the efficacy of damage prediction with several representations and different host-to-target tests. For example, using DaDO and SBDP as host or target results in the best damage accuracy (68.8% and 56.1%) at the building level (Fig. 6a) and the best MAE value (4.8% and 7.9%) at portfolio level (Fig. 6c). The worst prediction accuracy is observed for the portfolios that we assume to be conceptually different, e.g., DaDO and NBDP, with 15.8% and 23.23% at the building level (Fig. 6a) and MAE of 51.8% or 39.9% at portfolio level (Fig. 6c). Coherent results are observed considering the observed and predicted values of each damage grade (Fig. 6b). As already observed, the Haiti dataset fails to perform host-to-target adjustment effectively, regardless of the associated portfolio considered, which confirms the specificity of the Haitian portfolio.
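The host-to-target procedure amounts to a double loop over portfolios, sketched below. To keep the example self-contained, a toy `LookupClassifier` (hypothetical, predicting the most frequent grade seen for each feature combination) stands in for the XGBC model; any estimator exposing `fit` and `predict` could be passed instead. The portfolio names and records are invented for illustration.

```python
from collections import Counter, defaultdict


class LookupClassifier:
    """Toy stand-in for XGBC: majority grade per feature combination."""

    def fit(self, X, y):
        votes = defaultdict(Counter)
        for features, grade in zip(X, y):
            votes[tuple(features)][grade] += 1
        self.table_ = {key: c.most_common(1)[0][0] for key, c in votes.items()}
        # Fallback for feature combinations never seen in the host portfolio.
        self.default_ = Counter(y).most_common(1)[0][0]
        return self

    def predict(self, X):
        return [self.table_.get(tuple(features), self.default_) for features in X]


def host_to_target_accuracy(portfolios, make_model=LookupClassifier):
    """Train on 100% of each host and test on 100% of every other portfolio.
    Returns {(host, target): building-level accuracy in %}."""
    scores = {}
    for host, (X_host, y_host) in portfolios.items():
        model = make_model().fit(X_host, y_host)
        for target, (X_target, y_target) in portfolios.items():
            if target == host:
                continue
            predicted = model.predict(X_target)
            hits = sum(p == o for p, o in zip(predicted, y_target))
            scores[(host, target)] = 100.0 * hits / len(y_target)
    return scores


# Two hypothetical portfolios: features are (story class, age class, MSI).
X = [("NS1", "AG1", 7), ("NS1", "AG2", 8)]
portfolios = {"A": (X, ["green", "red"]), "B": (X, ["green", "yellow"])}
scores = host_to_target_accuracy(portfolios)
```

In this toy case identical buildings under identical shaking carry different damage grades in the two regions, so the transferred model is right for only half of the target buildings, which is the kind of contextual mismatch the test is designed to expose.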

Host-to-target adjustment damage model at building level through error value Eq. 1 (a), considering observed (obs) and predicted (pred) building damage in each damage grade (green, yellow, and red) at portfolio level (b), and MAE values (Eq. 2) at portfolio level (c) for several host-to-target testing configurations. For each row, the host portfolio is indicated at the top of each plot (b) and the target portfolio on the y-axis for (a) and (b) and on the x-axis for (c). In (a), the color scale is applied to the values in each cell

To enable implicit consideration of the regional design of each portfolio, MAE values are represented for all host-to-target adjustments with respect to socio-economic indexes (Fig. 7). The smaller MAE values correspond to host-to-target ratios of GDP per capita (Fig. 7a) and HDI (Fig. 7b) close to 1: with a GDP ratio between 0.1 and 10, the mean value of MAE is 14.28% (44.99% otherwise), and with an HDI ratio between 0.8 and 1.2, the mean value of MAE is 13.18% (43.62% otherwise). The building portfolio characteristics (construction quality, regional typologies, seismic regulation implementation) implicitly considered through the socio-economic index affect the efficacy of the host-to-target adjustment. Thus, the machine learning models trained on specific regions (or portfolios) can be adjusted to other specific regions with similar regional contextual characteristics.
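The comparison of mean MAE inside and outside a ratio band can be sketched as follows. The function name, the index values and the MAE values are all hypothetical placeholders chosen for illustration; only the band limits (0.8 to 1.2 for HDI) come from the text, and the ratio is assumed to be host value divided by target value.

```python
from statistics import mean


def mean_mae_by_ratio_band(results, index, low, high):
    """Average MAE for host-to-target pairs whose index ratio (host / target)
    lies within [low, high], and for the remaining pairs.

    results: iterable of (host, target, mae_percent)
    index:   {region: socio-economic index value}
    """
    inside, outside = [], []
    for host, target, mae in results:
        ratio = index[host] / index[target]
        (inside if low <= ratio <= high else outside).append(mae)
    return (
        mean(inside) if inside else None,
        mean(outside) if outside else None,
    )


# Placeholder HDI values and MAE results, not taken from the study.
hdi = {"A": 0.80, "B": 0.75, "C": 0.40}
results = [("A", "B", 5.0), ("B", "A", 7.0), ("A", "C", 40.0), ("C", "A", 50.0)]
inside_mean, outside_mean = mean_mae_by_ratio_band(results, hdi, low=0.8, high=1.2)
```

The same function applies to GDP per capita by passing the corresponding index dictionary and the 0.1 to 10 band.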

Distribution of mean absolute error (MAE) values (Eq. 2) in relation to the ratio of (a) GDP per capita and (b) HDI between host and target regions. Vertical dashed lines correspond to the lowest values of MAE centered on ratio 1

5 Discussion and conclusion

Thanks in part to the need to publish, document and reference scientific data (FAIR principles, Wilkinson et al. 2016), the sharing of post-seismic observations enables the testing of new approaches based on AI-derived methods for damage prediction. Recent studies have evaluated the relevance of these approaches to specific earthquake datasets, focusing on the performance of specific machine learning methods, the benefits of addressing data imbalance (which concerns most datasets and affects model performance), the importance and relevance of input features (i.e., building features, ground motion, damage classification) and the comparison of damage predictions with more conventional methods (e.g., Riedel et al. 2015; Mangalathu et al. 2020; Roeslin et al. 2020; Stojadinović et al. 2021; Harirchian et al. 2021; Ghimire et al. 2022, 2023). Such AI-derived methods are efficient because buildings with a common structural behavior have part of their DNA sequence in common (i.e., building features and response), despite different datasets and sequencing methods (i.e., post-seismic surveys). Starting with recent results obtained by Ghimire et al. (2022, 2023) on the model used and the parameters considered, this study goes one step further by assessing the transfer of a model trained on one region and tested on/applied to another region. The overall objective was to assess the extent to which a host model could be deployed in a target region for which no specific data were available for training (e.g., a region of moderate seismicity).

Riedel et al. (2015) and Ghimire et al. (2022) have shown that an exhaustive description of building features improves AI-based damage prediction. The same is probably true for seismic hazard characterization, but combining datasets from different contexts requires converging on the information available in all of them. In the first step, the datasets were therefore adjusted to a common reference in terms of (1) features characterizing the building portfolio (i.e., number of floors and age of construction), (2) ground motion (i.e., the macroseismic intensity provided by USGS ShakeMap), and (3) damage classification (i.e., a traffic-light damage scale). The performance of the XGBC model considered herein, trained and tested on balanced earthquake-specific datasets (i.e., the 2010 Haiti earthquake, the 2015 Nepal earthquake, the 2010 Serbia earthquake and a series of damaging Italian earthquakes), was assessed at both building and portfolio levels. We confirmed a damage classification accuracy of the order of 60%, as reported by Riedel et al. (2015), Mangalathu et al. (2020), Roeslin et al. (2020), Stojadinović et al. (2021), Harirchian et al. (2021) and Ghimire et al. (2022, 2023). The Haiti dataset is the only one with a lower classification accuracy (around 45%): although we cannot be sure of the origin of this misclassification, the quality of the survey characterizing the building features and damage attributes is put forward as a possible explanation. However, model performance improves at the portfolio level, particularly for Haiti, with a better fit between prediction and observation by damage level.

By aggregating the datasets, model training benefits not only from more data per damage level, but also from a wider sample of construction types and MSI. Indeed, by using only the number of stories and age of construction, design information is implicitly (but roughly) considered. By merging the datasets, the seismic response of buildings with the same characteristics is assumed to be nominally identical. Although this is a significant approximation, it remains the basic and necessary principle of global vulnerability assessment methods, which consist of assigning a generic model (or fragility curve) to each building class in the building taxonomy. In the case presented here, data aggregation for training only slightly improves model performance, although some tests on earthquake-specific datasets are significantly improved (e.g., SBDP). At the portfolio level, the performance (MAE) of the model is also superior to that of the earthquake-specific model, particularly when the training data imbalance issue is addressed. For example, classification accuracy rises from 59.2% and 65.7% for DaDO:E5 and SBDP, respectively, to 77.02% and 81.4% at the building level. Aggregating the datasets brings only a moderate improvement, owing to the diversity of the aggregated building portfolios and the variability of building responses when only the number of floors and the age of construction are considered. However, when training on 60% and testing on 40% of the aggregated-earthquake dataset, performance is 3.2% (MAE) at the portfolio level and 64% (εd) at the building level, i.e., the same order of magnitude as in previous studies.
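The 60%/40% split and the handling of training data imbalance mentioned above can be illustrated with a minimal sketch. It assumes plain random oversampling applied to the training split only; the oversampling method and splitting procedure actually used in the study are not specified in this section, and the record layout, function names and data below are hypothetical. The classifier itself (XGBC) is deliberately left out.

```python
import random
from collections import Counter, defaultdict


def split_60_40(records, seed=0):
    """Shuffle the records and return (train, test) with a 60/40 split."""
    rng = random.Random(seed)
    shuffled = list(records)
    rng.shuffle(shuffled)
    cut = int(round(0.6 * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]


def random_oversample(train, label_of, seed=0):
    """Duplicate randomly chosen records of the smaller classes until every
    class present in the training split matches the largest class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for rec in train:
        by_class[label_of(rec)].append(rec)
    target = max(len(group) for group in by_class.values())
    balanced = []
    for group in by_class.values():
        balanced.extend(group)
        balanced.extend(rng.choice(group) for _ in range(target - len(group)))
    return balanced


# Hypothetical records: (number of stories, age in years, intensity, damage class).
records = ([(2, 30, 7, "green")] * 70
           + [(3, 50, 8, "yellow")] * 20
           + [(1, 80, 9, "red")] * 10)

train, test = split_60_40(records)
balanced_train = random_oversample(train, label_of=lambda rec: rec[3])
counts = Counter(rec[3] for rec in balanced_train)
print(len(train), len(test), dict(counts))
```

In this sketch the test split is left untouched, so that performance is measured on the observed (imbalanced) damage distribution; this is a design choice of the example, not a statement about the study's protocol.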

Finally, the host-to-target adjustment highlights the need for training and testing on a homogeneous dataset, since portfolio diversity limits model performance. When the model is trained on the NBDP (Nepal) and tested on very different contextual environments (e.g., DaDO for Italy or SBDP for Serbia), it fails to predict damage from the only features considered in each portfolio (εd < 30% and MAE > 40%). The same observation is made when host and target data are inverted. However, for equivalent contextual environments, assimilated here to GDP per capita and HDI, model performance improves. It is thus possible to predict DaDO damage with reasonable accuracy by training the model on SBDP data: εd value of 68.8% or 56.1% at the building level and MAE value of 4.8% or 7.9% at the portfolio level. This opens the possibility of using the model in regions of moderate seismicity for which no datasets of damaged buildings exist, and thus of anticipating the impact of an earthquake.
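The two levels of evaluation used in the host-to-target test can be sketched as below. Building-level accuracy (εd) is taken as the percentage of buildings whose predicted class matches the observed one. For the portfolio level, this sketch assumes that MAE is the mean absolute difference, in percentage points, between the observed and predicted share of buildings in each traffic-light class; the exact definition is given by Eq. 2, which lies outside this section, so this form is an assumption. Function names and labels are hypothetical.

```python
from collections import Counter

CLASSES = ("green", "yellow", "red")


def building_accuracy(observed, predicted):
    """Percentage of buildings whose predicted class equals the observed class."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("observed and predicted must be non-empty and of equal length")
    hits = sum(o == p for o, p in zip(observed, predicted))
    return 100.0 * hits / len(observed)


def portfolio_mae(observed, predicted, classes=CLASSES):
    """Assumed portfolio-level MAE: mean absolute difference (percentage points)
    between observed and predicted class shares."""
    n = len(observed)
    obs, pred = Counter(observed), Counter(predicted)
    return sum(abs(obs[c] - pred[c]) for c in classes) * 100.0 / (n * len(classes))


# Hypothetical target-region labels and host-model predictions (illustration only).
observed = ["green"] * 6 + ["yellow"] * 3 + ["red"]
predicted = ["green"] * 5 + ["yellow"] + ["yellow", "yellow", "red"] + ["red"]

accuracy = building_accuracy(observed, predicted)
mae = portfolio_mae(observed, predicted)
print(accuracy, round(mae, 2))
```

Because misclassifications in opposite directions partly cancel when class shares are compared, the portfolio-level error in such a sketch can stay small even when a sizeable fraction of individual buildings is misclassified.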

In conclusion, this study confirms over 60% accuracy for damage estimation, using basic building features and a traffic-light damage scale. Portfolio quality is essential, as suggested by the poor performance observed on the HBDP (Haiti) dataset. The amount of information used to train the model seems less critical than data quality and the classification of buildings according to criteria that implicitly account for design information. Riedel et al. (2015) showed that model performance improves significantly with the addition of some structural or urban features to the portfolio. In our case, after aggregating portfolios from different contextual environments, defined here by GDP per capita and HDI, the model fails to predict losses correctly, because these socio-economic indices cannot fully capture the variability linked to the specific regional structural context (e.g., history of engineering practices, compliance with seismic codes). However, for comparable contextual environments (e.g., construction quality, regional typologies, maturity of seismic regulation implementation), the host-to-target adjustment can perform well and be used to anticipate damage. Using post-earthquake building survey datasets collected from different regions, further research should assess the effectiveness of the model for host-to-target adjustment; develop and test models that cover broader built-up environments; evaluate additional key features, provided they are easily accessible, on which to focus in order to better model damage (e.g., urban fabric information as suggested by Riedel et al. 2015); evaluate the contribution of socio-economic parameters in the learning phase to improve the host-to-target damage model; and explore integration with a global dynamic exposure model (Schorlemmer et al. 2020) to advance this emerging method for seismic damage prediction.

References

ATC (2005) ATC-20–1, Field manual: postearthquake safety evaluation of buildings, 2nd edn. Applied Technology Council, Redwood City, California

Chen T, Guestrin C (2016) XGBoost: a scalable tree boosting system. In: 22nd ACM SIGKDD international conference on knowledge discovery and data mining. pp 785–794

DesRoches R, Comerio M, Eberhard M, et al (2011) Overview of the 2010 Haiti Earthquake. https://doi.org/10.1193/1.3630129

Dolce M, Speranza E, Giordano F et al (2019) Observed damage database of past Italian earthquakes: the Da.D.O. WebGIS. Boll Di Geofis Teor Ed Appl 60:141–164. https://doi.org/10.4430/bgta0254

Ghimire S, Guéguen P, Giffard-Roisin S, Schorlemmer D (2022) Testing machine learning models for seismic damage prediction at a regional scale using building-damage dataset compiled after the 2015 Gorkha Nepal earthquake. Earthq Spectra. https://doi.org/10.1177/87552930221106495

Ghimire S, Philippe G, Adrien P, et al (2023) Testing machine learning models for heuristic building damage assessment applied to the Italian Database of Observed Damage (DaDO). Nat Hazards Earth Syst Sci Discuss 1–29. https://doi.org/10.5194/NHESS-2023-7

Grünthal G (1998) Escala Macro Sísmica Europea EMS-98. https://www.franceseisme.fr/EMS98_Original_english.pdf, 98, 101 pp. (last access: 29 Sept 2023)

Guéguen P, Michel C, Lecorre L (2007) A simplified approach for vulnerability assessment in moderate-to-low seismic hazard regions: application to Grenoble (France). Bull Earthq Eng 5:467–490. https://doi.org/10.1007/s10518-007-9036-3

Harirchian E, Kumari V, Jadhav K et al (2021) A synthesized study based on machine learning approaches for rapid classifying earthquake damage grades to rc buildings. Appl Sci 11:7540. https://doi.org/10.3390/app11167540

Hegde J, Rokseth B (2020) Applications of machine learning methods for engineering risk assessment – a review. Saf Sci 122:104492. https://doi.org/10.1016/j.ssci.2019.09.015

Mangalathu S, Sun H, Nweke CC et al (2020) Classifying earthquake damage to buildings using machine learning. Earthq Spectra 36:183–208. https://doi.org/10.1177/8755293019878137

MTPTC (2010) Ministere des Travaux Publics, Transports et Communications: Evaluation des Bâtiments. https://www.mtptc.gouv.ht/accueil/recherche/article_7.html

NPC (2015) Post disaster needs assessment. https://www.npc.gov.np/images/category/PDNA_volume_BfinalVersion.pdf (last access: 27 Sept 2023)

Pedregosa F, Varoquaux G, Buitinck L et al (2011) Scikit-learn. GetMobile Mob Comput Commun 19:29–33. https://doi.org/10.1145/2786984.2786995

RELA RELA Framework Homepage. https://miloskovacevic68.github.io/RELA/. Accessed 29 Sept 2023

Riedel I, Guéguen P, Dalla Mura M et al (2015) Seismic vulnerability assessment of urban environments in moderate-to-low seismic hazard regions using association rule learning and support vector machine methods. Nat Hazards 76:1111–1141. https://doi.org/10.1007/s11069-014-1538-0

Roca A, Goula X, Susagna T et al (2006) A simplified method for vulnerability assessment of dwelling buildings and estimation of damage scenarios in Catalonia, Spain. Bull Earthq Eng. https://doi.org/10.1007/s10518-006-9003-4

Roeslin S, Ma Q, Juárez-Garcia H et al (2020) A machine learning damage prediction model for the 2017 Puebla-Morelos, Mexico, earthquake. Earthq Spectra 36:314–339. https://doi.org/10.1177/8755293020936714

Salehi H, Burgueño R (2018) Emerging artificial intelligence methods in structural engineering. Eng Struct 171:170–189. https://doi.org/10.1016/j.engstruct.2018.05.084

Schorlemmer D, Beutin T, Cotton F, et al (2020) Global dynamic exposure and the OpenBuildingMap - a big-data and crowd-sourcing approach to exposure modeling. EGU2020. https://doi.org/10.5194/EGUSPHERE-EGU2020-18920

Silva V, Brzev S, Scawthorn C et al (2022) A building classification system for multi-hazard risk assessment. Int J Disaster Risk Sci 13:161–177. https://doi.org/10.1007/s13753-022-00400-x

Stojadinović Z, Kovačević M, Marinković D, Stojadinović B (2021) Rapid earthquake loss assessment based on machine learning and representative sampling. Earthq Spectra 38:152–177. https://doi.org/10.1177/87552930211042393

Wald DJ, Worden BC, Quitoriano V, Pankow KL (2005) ShakeMap manual: technical manual, user’s guide, and software guide

Wilkinson MD, Dumontier M, Aalbersberg IJ, et al (2016) Comment: the FAIR guiding principles for scientific data management and stewardship. Sci Data 3. https://doi.org/10.1038/sdata.2016.18

Xie Y, Ebad Sichani M, Padgett JE, DesRoches R (2020) The promise of implementing machine learning in earthquake engineering: a state-of-the-art review. Earthq Spectra 36:1769–1801. https://doi.org/10.1177/8755293020919419

Acknowledgements

We thank Eric Calais from Ecole Normale Supérieure Paris for facilitating data access and Rwendy Carré, Head of the Statistics and Informatics Unit (Ministère des Travaux Publics, Transports et Communication) of Haiti, for providing information on the 2010 Haiti post-earthquake damage survey. This study was funded by the AXA Research Fund supporting the project New Probabilistic Assessment of Seismic Hazard, Losses and Risks in Strong Seismic Prone Regions. S.G. thanks the URBASIS-EU project (H2020-MSCA-ITN-2018, Grant No. 813137). P.G. thanks LabEx OSUG@2020 (Investissements d’avenir- ANR10LABX56).

Author information

Contributions

Subash Ghimire: Conceptualization, methodology, data preparation, investigation, visualization, draft preparation. Philippe Guéguen: Conceptualization, investigation, visualization, supervision, review and editing.

Ethics declarations

Competing interests

The authors declare that they have no conflict of interest.

Data and resources

The Italian data used in this study are available from the Database of Observed Damage (DaDO) web-based GIS platform of the Italian Civil Protection Department, developed by the Eucentre Foundation (https://egeos.eucentre.it/danno_osservato/web/danno_osservato?lang=EN, Dolce et al. 2019). The damage data from the 2010 Serbia earthquake are available at https://miloskovacevic68.github.io/RELA/. The data from the 2015 Nepal earthquake can be accessed at https://eq2015.npc.gov.np/#/ (or provided upon request). The data from the 2010 Haiti earthquake can be obtained from the Ministère des Travaux Publics, Transports et Communication of Haiti.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ghimire, S., Guéguen, P. Host-to-target region testing of machine learning models for seismic damage prediction in buildings. Nat Hazards 120, 4563–4579 (2024). https://doi.org/10.1007/s11069-023-06394-z

DOI: https://doi.org/10.1007/s11069-023-06394-z