Abstract

Infrastructures are critical for the functioning of society. Due to globalization, damage to components of infrastructure systems can propagate across international boundaries, resulting in broad economic and social impacts. Hence, it is fundamental to develop powerful tools for the assessment of infrastructure risk that consider a wide spectrum of uncertainties. Past studies of infrastructure risk assessment are limited to a few countries, partly because several issues arising from the complexity of these systems remain only partially answered or not properly addressed: the paucity and heterogeneity of data and methods; the dependencies between components and systems; the modeling of ground shaking with scenario and probabilistic approaches, taking into account site effects, spatial variability, and cross correlation of ground motion at the urban scale; and the definition of risk metrics tailored to infrastructure. Moreover, the presently available infrastructure risk assessment tools are not sufficiently illustrative, user-friendly, and comprehensive to meet actual needs. To this end, this paper gives an overview of these issues and proposes a comprehensive approach that leverages the main strengths of existing infrastructure risk methodologies, integrating them into a powerful open-source tool and providing a common platform, from hazard to risk analysis, intended for global and easy use. The methodology and its implementation are illustrated through a test-bed study of the water supply network of the city of Thessaloniki, Greece, considering an Mw 6.5 scenario of the 1978 Thessaloniki earthquake and an event-based probabilistic approach, while also evaluating the sensitivity of the results to cross spatial correlation.

1 Introduction

Critical infrastructures refer to the assets and systems that are vital for society, and whose damage or destruction can lead to serious consequences for the health, safety, and socio-economic well-being of the population (Council Directive 2008; PCCIP 1997). The leading cause of infrastructure disruption is natural disasters, and their direct impact has been estimated at a minimum of $90 billion per year (Hallegatte et al. 2019). Earthquakes like 1994 \({M}_{w}\) 6.7 Northridge, 2010 \({M}_{w}\) 8.8 Maule, 2011 \({M}_{w}\) 9.1 Tohoku, 2011 \({M}_{w}\) 6.2 Christchurch, 2015 \({M}_{w}\) 7.8 Gorkha, and 2017 \({M}_{w}\) 7.1 Puebla are some of the examples that caused serious disruption to critical infrastructure, affecting the lives of many people and producing high economic losses (Evans and McGhie 2011; Giovinazzi et al. 2011; Government of Nepal 2015; Lemnitzer et al. 2021; Nakashima et al. 2014; Todd et al. 1994). Moreover, the current rapid urbanization is estimated to lead to 68% of the total population living in urban areas by 2050 (United Nations 2019), which will increase the reliance of people on infrastructure, potentially exacerbating the impact of future earthquakes (Sathurshan et al. 2022). In today’s globalized world, the disruption or loss of a system is often not limited to a certain area, and it is likely to propagate directly or indirectly to other parts of the globe (Li et al. 2015). Furthermore, major components of our infrastructure are aging (Van Breugel 2017; Houlihan 1994), which can increase their vulnerability to future events. It is thus fundamental to improve our understanding of infrastructure risk, which can support better mitigation and recovery plans.

Critical infrastructure is spatially characterized as networks, and its components are interlinked and behave as a system. In terms of risk assessment on an urban or regional scale, there are synergies between various intricate infrastructure networks, which generally exacerbate the impact. These dependencies can be classified into intra-dependencies (between the various components in the same system) and interdependencies (between the different interacting systems). In this study, we focus on intra-dependencies, leaving the systemic treatment of the interdependencies between systems for future study.

One of the crucial factors of infrastructure systems such as utility and transportation networks is maintaining connectivity (Pribadi et al. 2021) and serviceability as has been outlined by Dueñas-Osorio et al. (2007), Adachi and Ellingwood (2009), Romero et al. (2010), Cimellaro et al. (2016) for the water supply systems; Cavalieri et al. (2014), Cardoni et al. (2020) for the electric power network; Esposito et al. (2015) for the gas system; Jayaram and Baker (2010) and Argyroudis et al. (2015) for the transportation system. To this end, the European research project SYNER-G is arguably among the most comprehensive efforts to develop an efficient methodology and provide adequate tools for infrastructure risk (Pitilakis et al. 2014a; b).

Despite the importance of infrastructure risk assessment and the progress already made, an efficient and well-accepted methodology and tool is still missing. This is demonstrated in the first part of the paper, which offers an overview of the existing tools and the advantages and disadvantages of each. In the second and third parts, we address the most important challenges in infrastructure risk: (i) the complexity of infrastructure systems, which behave as intricate networks with heterogeneous typologies and structural/operational characteristics; (ii) the lack of clear and globally recognized methods that are simple and easily applicable (De Felice et al. 2022); and (iii) the absence of a unified tool that enables an integrated approach, from the generation of the seismic event and the source characterization to the connectivity loss of the infrastructure network at risk.

The first two challenges are related to the paucity and heterogeneous characteristics of the data, as well as the complexity in modeling ground shaking considering spatial variability of ground motions and cross correlation. In this context, the present study proposes methods and metrics built upon the wealth of prior studies for risk assessment that are thorough yet clear, explicit, and, to the greatest extent possible, consistent with many different kinds of lifelines. To efficiently address the issue of the third challenge and gap of the previous works, the proposed infrastructure risk assessment methodology has been implemented in a widely used open-source tool, the OpenQuake engine (Pagani et al. 2014; Silva et al. 2014).

Finally, in the fourth part, the proposed methodology and tool are illustrated through a case study of the water supply network of Thessaloniki, Greece, considering both scenario-based and event-based probabilistic analyses. The tool enables sensitivity analyses of various aspects; in this work, we examine the effect of cross spatial correlation, which is a prerequisite for the analysis of spatially distributed systems.

2 Overview of infrastructure risk assessment tools

A few infrastructure risk assessment platforms and tools have been developed in past years, such as HAZUS, IN-CORE, WNTR, and OOFIMS. Table 1 summarizes their most important features. The prerequisites for infrastructure seismic risk assessment are described further in the following section.

As seen in Table 1, the global use of all these applications is mainly limited by the availability of exposure data or hazard analysis capabilities. HAZUS carries out the implementation mainly at the component level and does not include connectivity analysis. The Water Network Tool for Resilience (WNTR) is an EPANET (https://www.epa.gov/water-research/epanet) compatible tool focusing only on the resilience of water distribution networks (Klise et al. 2017). The Interdependent Networked Community Resilience Modeling Environment (IN-CORE) platform, developed to support community resilience in case of various extreme events, includes the reachability analysis of various infrastructures (Lee et al. 2019). The Object-Oriented Framework for Infrastructure Modeling and Simulation (OOFIMS), developed in the frame of the SYNER-G project (Franchin and Cavalieri 2013; Pitilakis et al. 2014b), allows infrastructure risk assessment of all main physical elements of the built environment, including buildings, critical infrastructures, and utility systems, at the component, connectivity, and functional levels. However, the usage of these tools for infrastructure risk assessment is currently limited to some parts of a few countries, mainly due to their complexity and limited seismic hazard libraries. Considering the aspects of these applications, the main conclusion drawn from this tabular presentation is the need for a common platform that has all the required features to assess infrastructure risk at the system level for global usage.

In the following, we briefly discuss the various assumptions and simplifications of the different components of infrastructure risk assessment methodologies, in particular the modelling of the exposure and seismic hazard, the fragility and damage assessment, and finally the risk assessment and connectivity.

3 Overview and outline of the methodology

Infrastructure risk assessment can be done at component, connectivity, serviceability, or functional level. The component level risk assessment requires detailed hazard and vulnerability analyses, considering various fragility functions. Additionally, topology-based network analysis is required to reach the connectivity level analysis. In terms of the infrastructure network, it becomes necessary to check the connection of the system to strategically apply proactive and reactive mitigation measures. For the serviceability or functionality level, various flow-based analyses are also required. Serviceability analysis gives information about the quality of services to the customer and for that, each type of infrastructure requires its own unique methodology and metrics. The risk assessment tool that has been developed within the scope of this work is aimed at connectivity analysis, which is relevant for the majority of infrastructures. The methodology is outlined in Fig. 1 and further elaborated in the following sections.

3.1 Exposure modelling

The abstract representation and spatial characterization of the built environment can be expressed as point-like, area-like, and line-like components. Critical infrastructures can be represented through networks, i.e. compositions of nodes/vertices (point-like) and edges/links (line-like), which are interlinked, most commonly with physical links. The networks can be represented as directed or undirected according to the specification of the infrastructure system and the availability of the data. Past studies usually place a single link between the nodes, which might often fail to represent complex systems comprising series or parallel sub-links between the nodes (Guidotti et al. 2017). Therefore, to represent almost all possible infrastructure systems (e.g. water supply and waste water systems, electric power network, transportation system), a tool should support undirected, directed, simple, and multigraphs (i.e. graphs with more than one edge between two nodes), which is the aim of this work.

The paucity of data is one of the main challenges of exposure models. In the case of transportation systems, data can be extracted from open sources like OpenStreetMap. However, for utility systems, data might be easily accessible only to the owners and relevant stakeholders, who should be the envisaged users of the risk tool, subject to their internal security and privacy protocols. In general, some inferences can also be made from the visible superstructure and from transportation networks (since utilities generally run under the roadways) to build a simplified network for public usage. Moreover, defining the footprints, boundaries, and resolution is the first step in modelling the infrastructure network. This step depends on a variety of factors, i.e. the availability of data, performance criteria, physical boundaries, the location of strategic components, the accuracy demanded by the user, and the interdependencies of the components with other systems (Sharma et al. 2021), as well as the cost of data collection, processing, archiving, and computation.

3.2 Seismic hazard modeling

Consideration of a single credible earthquake event, termed scenario-based analysis, is popular in risk assessment due to its simplicity. Such an assessment can be communicated easily to different stakeholders or communities and used for the validation and verification of models using data from past events. On the other hand, it is possible to assess risk considering all the potential events that might happen in a given region, with the associated rates of occurrence. Spatially distributed systems operate as networks, and the damage of any component may lead to indirect loss at distant components through connectivity. Therefore, to compute their risk probabilistically, it is necessary to establish sets of stochastic events that are probabilistically representative of all potential earthquakes, so as to obtain the simultaneous ground motion intensities across the network. This approach is termed event-based probabilistic seismic hazard analysis (PSHA).
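As a minimal sketch of how a stochastic event set could be generated, the snippet below samples, for each rupture in a hypothetical catalogue, the number of occurrences over an investigation time, assuming Poisson occurrence. The rupture identifiers, annual rates, and function names are illustrative assumptions and do not represent the implementation of any particular engine.

```python
import math
import random


def sample_poisson(mean, rng):
    """Draw one Poisson-distributed integer (Knuth's multiplication method)."""
    limit = math.exp(-mean)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def sample_event_set(annual_rates, investigation_time, rng):
    """Return a list of rupture ids, one entry per sampled occurrence."""
    events = []
    for rupture_id, rate in annual_rates.items():
        n = sample_poisson(rate * investigation_time, rng)
        events.extend([rupture_id] * n)
    return events


# Hypothetical ruptures with assumed annual occurrence rates.
annual_rates = {"rup_A": 0.01, "rup_B": 0.002, "rup_C": 0.0005}
rng = random.Random(42)
event_set = sample_event_set(annual_rates, investigation_time=10_000, rng=rng)
print(len(event_set), "events sampled over 10,000 years")
```

Each sampled event would then be assigned a ground motion field covering all sites of the network at once, which is what allows simultaneous intensities to be evaluated.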

Infrastructure consists of many types of components with distinct dynamic properties and behavior. Consequently, different intensity measures (IMs) might be required for the vulnerability and risk analyses. In the case of transient ground shaking, for the components that can be represented as point elements (e.g., buildings, transmission substations, pumps), peak ground acceleration (PGA) or pseudo-spectral acceleration (SA) are the most common IMs. On the other hand, for linear components like pipelines, peak ground velocity (PGV) is more adequate due to its better correlation with longitudinal ground strains. These intensity measures are calculated using suitable ground motion models (GMMs), which are mainly based on magnitude, source-to-site distance, fault type, and soil conditions. Physics-based approaches could be an alternative suitable for scenario-based calculations (Smerzini and Pitilakis 2018), but are probably less appealing for event-based probabilistic analysis due to the computational demand.

When assessing the risk for spatially distributed systems containing multiple interdependent elements at risk, consideration of the cross and spatial correlation of ground motion is fundamental (Jayaram and Baker 2010; Costa et al. 2018; Weatherill et al. 2014; Weatherill et al. 2015). Consideration of the cross spatial correlation of different intensity measures, particularly for studies involving PGV, is still limited. Studies like Kongar (2017) and Pitilakis et al. (2014b) have adopted the cross correlation model proposed by Weatherill et al. (2014), called the sequential conditional simulation method, which, however, assumes that the ground motion field of the secondary IM is conditional only upon the primary IM and that the correlation between residuals of different IMs is known. Another approach assumes that the correlation structure of different IMs at different locations is Markovian in nature (Worden et al. 2018), i.e., the cross-correlation between IMs is assumed to be conditionally independent of the spatial correlation of the IMs. In this approach, the spatial cross-correlation between two different IMs at different locations is modelled as the product of the spatial correlation of intensity at the two sites, based on their separation distance, and the cross-correlation of the two IMs at the same location. Additional information about this procedure and its mathematical formulation can be found in Silva and Horspool (2019) or Weatherill et al. (2015).
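The product form of the Markovian approach can be sketched in a few lines. The exponential decay used for the spatial term, the single correlation range shared by both IMs, and the numerical values are assumptions made purely for illustration; they are not taken from the models cited above.

```python
import math


def spatial_correlation(distance_km, corr_range_km):
    """Exponential decay with separation distance (assumed functional form)."""
    return math.exp(-3.0 * distance_km / corr_range_km)


def markov_cross_spatial_correlation(distance_km, corr_range_km, cross_corr):
    """Correlation between IM a at one site and IM b at another site,
    taken as the product of the spatial and the same-site cross terms."""
    return spatial_correlation(distance_km, corr_range_km) * cross_corr


# Illustrative values only: same-site PGA-PGV correlation and correlation range.
rho_pga_pgv = 0.7
range_km = 20.0
for h in (0.0, 5.0, 20.0):
    rho = markov_cross_spatial_correlation(h, range_km, rho_pga_pgv)
    print(f"h = {h:4.1f} km -> rho = {rho:.3f}")
```

At zero separation the expression reduces to the same-site cross-correlation, and it decays towards zero as the two sites move apart.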

Apart from transient ground shaking, permanent ground deformation (PGD) induced by liquefaction, landslides, or surface fault rupture should also be included when assessing the risk of extended infrastructure networks, mainly for linear components (e.g., pipelines, roads, bridges, tunnels). Past events such as the 2011 \({M}_{w}\) 9.1 Tohoku earthquake and the 2011 \({M}_{w}\) 6.2 Christchurch earthquake have shown significant disruption to infrastructure services due to liquefaction. Although PGD is important for hazard assessment, only a few studies have so far incorporated it in open-source tools.

3.3 Fragility analysis and damage assignment

Fragility curves at the component or element level serve as a fundamental tool to understand how different components of a system behave during an earthquake. A fragility function is the conditional probability of exceeding a predefined limit state given the intensity measure for each component. In Pitilakis et al. (2014a), existing fragility curves have been documented for various infrastructure systems at the component level. Similar documentation may be found in HAZUS (FEMA 2020). Databases of fragility and vulnerability functions for common typologies of residential, commercial, and industrial buildings already exist and are compatible with open-source tools such as the OpenQuake engine (Yepes-Estrada et al. 2016; Martins and Silva 2021), but unfortunately, so far, they do not cover most of the components typically present in infrastructure systems. For the moment, existing functions for infrastructure components can be used for this purpose and, if needed, new ones can be developed on a case-by-case basis. However, a major problem persists in the selection of appropriate fragility and vulnerability functions for the different components of infrastructure networks. With a few exceptions, their validation with damage data from past earthquakes is rather poor, which inevitably increases the associated uncertainty (Silva et al. 2019; Pitilakis et al. 2014a). Regarding utility systems, the data acquired from the Christchurch earthquake, which had a major impact on these systems, recently allowed the development of vulnerability functions for pipelines considering ground shaking and liquefaction (Bellagamba et al. 2019; Kongar 2017).

Having assigned and selected the fragility functions for each element and component of the system, the damage assessment of any critical infrastructure should be first evaluated specifically and locally at the component or asset level. While fragility analysis gives the probability of being in a specified damage state, it is important to deterministically predict the damage of each component of the network in each scenario with induced IMs. For this, specific damage states are assigned to each component of the system by sampling the random variable with respect to damage state probabilities from the fragility function for the estimated IM. The damage state of each asset in every event should be directly computed in a modern and powerful open-source tool of infrastructure risk assessment in order to proceed to the next step of the risk analysis at the connectivity level.
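The sampling step described above can be sketched as follows, assuming lognormal fragility curves. The damage state names, the median and dispersion values, and the function names are hypothetical and chosen only to make the example runnable.

```python
import math
import random

DAMAGE_STATES = ["none", "minor", "moderate", "extensive", "complete"]


def lognormal_cdf(im, median, beta):
    """Probability of exceeding a limit state for a lognormal fragility curve."""
    if im <= 0.0:
        return 0.0
    z = (math.log(im) - math.log(median)) / beta
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def damage_state_probabilities(im, fragility):
    """Convert exceedance probabilities into probabilities of being in each state."""
    exceed = [1.0] + [lognormal_cdf(im, m, b) for m, b in fragility] + [0.0]
    return [exceed[k] - exceed[k + 1] for k in range(len(exceed) - 1)]


def sample_damage_state(im, fragility, rng):
    """Sample one discrete damage state for a component given its IM."""
    probs = damage_state_probabilities(im, fragility)
    return rng.choices(DAMAGE_STATES, weights=probs, k=1)[0]


# Hypothetical (median, beta) pairs for four limit states, with IM = PGA in g.
# Equal betas keep the curves from crossing, so all probabilities are >= 0.
fragility = [(0.15, 0.6), (0.30, 0.6), (0.60, 0.6), (1.00, 0.6)]
rng = random.Random(7)
print(damage_state_probabilities(0.4, fragility))
print(sample_damage_state(0.4, fragility, rng))
```

Repeating the sampling for every component in every event gives one discrete damage realization of the whole network per event, which is the input needed for the connectivity analysis.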

3.4 Risk analysis at connectivity level

In the case of building aggregates at the city scale, the total loss of the building portfolio can be determined by simply aggregating the losses of the individual units. However, in the case of critical infrastructures, this is not straightforward, as their components are interdependent and behave synergistically as a complex network. Therefore, it is of utmost importance to incorporate connectivity analysis when implementing a tool for infrastructure risk assessment. To do this, a network-based approach founded on graph theory (Biggs et al. 1986) has usually been selected as the most appropriate way to model the connectivity loss efficiently. As highlighted by Johansen and Tien (2018) and Ouyang (2014), the effectiveness of network-based approaches lies in their ability to assess the network’s capacity to prevent events with major consequences, to assess the effects of enhancing the absorptive capacities of crucial infrastructure components, and to support design decisions that identify specific restoration priorities.

Graph theory, capable of revealing meaningful information about the topological architecture of the infrastructure system, is an advantageous approach for connectivity analysis. Since the problem of the Königsberg bridges studied by Leonhard Euler, graph theory has evolved into a strong tool for the systemic study of various fields, such as social, information, biological, or geographical networks (Newman 2003). A graph is made up of nodes/vertices which are connected by links/edges. Graphs can be represented and stored as an adjacency matrix, adjacency list, or incidence matrix. The first two are commonly used in practice, being simpler, and the adjacency list in particular requires less space when handling sparse graphs.
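For illustration, the two common storage schemes can be built from an edge list as sketched below. The node and edge identifiers are hypothetical; the example deliberately includes two parallel edges to show how a multigraph can be stored.

```python
from collections import defaultdict

# Hypothetical edge list: (edge_id, node_u, node_v). Two pipes join N1 and N2.
edges = [("p1", "N1", "N2"), ("p2", "N1", "N2"), ("p3", "N2", "N3")]
nodes = ["N1", "N2", "N3"]

# Adjacency list: each node maps to (neighbour, edge_id) pairs, so parallel
# edges of a multigraph are kept as separate entries.
adjacency_list = defaultdict(list)
for edge_id, u, v in edges:
    adjacency_list[u].append((v, edge_id))
    adjacency_list[v].append((u, edge_id))  # undirected graph

# Adjacency matrix: entry [i][j] counts the edges between nodes i and j.
index = {name: k for k, name in enumerate(nodes)}
adjacency_matrix = [[0] * len(nodes) for _ in nodes]
for _, u, v in edges:
    adjacency_matrix[index[u]][index[v]] += 1
    adjacency_matrix[index[v]][index[u]] += 1

print(dict(adjacency_list))
print(adjacency_matrix)
```

The matrix always needs one entry per pair of nodes, whereas the list stores only the edges that exist, which is why the list is usually preferred for sparse infrastructure networks.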

Network analysis is the core of any connectivity analysis. Topology-based methods estimate the system performance with less data and information than flow-based approaches. They are based on network topology, with discrete states for each component, generally expressed as failed or normal. Failure of a node can happen directly through its own damage, or indirectly through disconnection from the source nodes of the same infrastructure system or through the failure of a dependent node of another system. Node heterogeneity can be described in terms of supply, demand, transmission, intermediate, or connection nodes. Appropriate metrics, like connectivity loss, efficiency, or percentage of population affected, can then be measured using this technique. By capturing the topological features, this method makes it possible to evaluate the overall performance of networks, identify critical components, and assess mitigation measures, such as prioritization in case of limited budget and resources, the addition of redundancies, backup facilities, or bypasses, or the strengthening of individual components (Ouyang 2014).

At the urban/regional scale, the networks/graphs are in general connected under normal operating conditions. A graph is said to be connected if there is a path between every pair of vertices in the graph. Due to the disturbances caused by earthquakes or any other hazard, demand nodes might lose their connection to supply nodes because of the damage to some components and the dependencies among them. This is checked through connectivity analysis based on topology-based network analysis. In practice, this analysis allows us to check whether the population still has access to basic services, including water, electricity, or health care, after extreme events. To check this, the original “undamaged” graph, formed from the exposure model, should be updated following a seismic event according to the damage state reached or exceeded by each component. This is done by removing the components that have failed according to the damage analysis. Then, the connectivity of this updated graph may be examined using various graph theory algorithms. For example, depending on the required performance assessment, this can be done by updating the adjacency matrix, analyzing the connected components, and/or using algorithms like breadth-first search or depth-first search (Cormen et al. 2022) to traverse the graph and the Dijkstra algorithm (Dijkstra 1959) to compute the shortest path. With the graph theory approach, connectivity at the global as well as the local or nodal level may be computed easily and efficiently. These analyses allow us to understand the performance of cities as a whole in case of earthquakes, in order to implement better mitigation and recovery plans. Also, the efficiency of supply nodes and the isolation of particular demand nodes can be determined in case of earthquake events. This is particularly important for the nodes that serve critical facilities like hospitals, schools, administration buildings, and firefighting stations.
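The update-and-traverse procedure described above can be sketched with a breadth-first search. The small network, the component identifiers, and the function names are hypothetical; the sketch assumes an undirected graph and treats every failed component as fully removed.

```python
from collections import deque


def build_adjacency(edges, failed_nodes=(), failed_edges=()):
    """Adjacency list of the undirected graph without the failed components."""
    failed_nodes, failed_edges = set(failed_nodes), set(failed_edges)
    adjacency = {}
    for edge_id, u, v in edges:
        for node in (u, v):
            if node not in failed_nodes:
                adjacency.setdefault(node, set())
        if edge_id in failed_edges or u in failed_nodes or v in failed_nodes:
            continue
        adjacency[u].add(v)
        adjacency[v].add(u)
    return adjacency


def reachable_from(adjacency, start):
    """Breadth-first search returning every node reachable from start."""
    if start not in adjacency:
        return set()
    visited, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in adjacency[node]:
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append(neighbour)
    return visited


# Hypothetical network: one source S, a junction J, two demand nodes D1 and D2.
edges = [("e1", "S", "J"), ("e2", "J", "D1"), ("e3", "J", "D2")]
damaged = build_adjacency(edges, failed_edges={"e2"})
print(reachable_from(damaged, "S"))
```

In this example the failure of edge e2 isolates D1 from the source while D2 remains served; failing the junction J instead would isolate both demand nodes.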

A critical aspect of any connectivity loss assessment is the selection of adequate metrics to evaluate the performance of the system. Appropriate performance metrics and connectivity models have been chosen so that they can be applied uniformly to the majority of the components of an infrastructure network. These metrics include the model for simple connectivity loss estimation, designated as Complete Connectivity Loss \((CCL)\) and Partial Connectivity Loss \((PCL)\) in this study for a clear understanding, and the model for Weighted Connectivity Loss \((WCL)\) (Albert et al. 2000; Argyroudis et al. 2015; Franchin and Cavalieri 2013; Pitilakis et al. 2014b; Poljanšek et al. 2012). Additionally, two further loss metrics, namely nodal and global efficiency loss, which are very convenient in real-world network problems, are introduced.

(i) Complete Connectivity Loss (CCL): Under normal conditions, the network is usually described by a connected graph, i.e., a path exists between every pair of nodes and, consequently, between supply and demand nodes. After an earthquake, some components may be damaged or isolated and thus not operational, and their removal may fragment the originally connected graph. In this case, some of the demand nodes might have no path to any of the supply nodes. \(CCL\) evaluates this pure connectivity and is calculated by the following formula:

$$CCL = 1 - \frac{{CN_{s} }}{{CN_{o} }},$$(1)

where \({CN}_{s}\) is the number of connected demand nodes after a seismic event, and \({CN}_{o}\) is the number of connected demand nodes before the seismic event. Connected demand nodes in this case mean at least connected to one source node. This is helpful to identify the worst-case scenario when the nodes could be completely isolated. For example, it helps to identify a settlement that will not have any access to hospitals or identify schools with no water supply at all after an earthquake event. The example is illustrated in Fig. 2, with three supply nodes and three demand nodes, namely S(1–3) and D(1–3), respectively. Before a seismic event, all demand nodes are connected to supply nodes, but after the event, D1 will be disconnected from all supply nodes, resulting in a \(CCL\) value of 33.3% for the system.

(ii) Partial Connectivity Loss (PCL): To compute this metric, the number of sources connected to each demand node is first computed in the original network. After the damage assessment, the network is updated by removing the non-operational components, and the number of source nodes connected to each demand node is computed again. The metric \({PCL}_{i}\) at the nodal level and \(PCL\) of the overall network are calculated as follows:

$$PCL_{i} = 1 - \frac{{N_{s}^{i} }}{{N_{o}^{i} }},$$(2)

$$PCL = 1 - \left\langle {\frac{{N_{s}^{i} }}{{N_{o}^{i} }}} \right\rangle ,$$(3)

where \(\langle \cdot \rangle\) denotes averaging over all demand nodes, \({N}_{s}^{i}\) is the number of sources connected to the ith demand node after the seismic event, and \({N}_{o}^{i}\) is the number of sources connected to the ith demand node before the seismic event. Based on the connectivity, this metric measures the average reduction in the ability of demand nodes to receive flow from sources. Even though this metric is based on topology, it also gives indirect insight into the quality of the service to each demand node. As an illustration, from Fig. 2, the local loss of connectivity is 100%, 66.67%, and 66.67% for D1, D2, and D3 respectively, and their average gives a \(PCL\) of 77.78% for the network.

(iii) Weighted Connectivity Loss (WCL): This metric extends the \(PCL\) by including the weights of the edges in the computation. Weights can represent a range of quantities, such as the distance between two nodes, travel time, cost, or an importance factor for the nodes or settlements that an edge connects. This is beneficial in many cases. For instance, in dense urban road networks, where the total loss of connection might be rare, the weighted connectivity loss supplements the connectivity loss with the increased travel time or distance travelled. Similarly, in the case of a utility system, there might be situations where densely populated areas must be given more emphasis. \({WCL}_{i}\) at the nodal level and \(\mathrm{WCL}\) for the overall network can be computed as:

$$WCL_{i} = 1 - \frac{{N_{s}^{i} W_{s}^{i} }}{{N_{o}^{i} W_{o}^{i} }},$$(4)

$${\text{WCL}} = 1 - \left\langle {\frac{{N_{s}^{i} W_{s}^{i} }}{{N_{o}^{i} W_{o}^{i} }}} \right\rangle ,$$(5)

where the weights \({W}_{s}^{i}\) and \({W}_{o}^{i}\) can be defined in different ways. For example, in transportation systems, the weights can be calculated as follows:

$$W^{i} = \sum \limits_{j,j \ne i} I_{ij} \cdot \frac{1}{{TT_{ij} }},$$(6)

where \({I}_{ij}\) is equal to 1 when a path exists between the ith and jth nodes, and 0 otherwise, and \({TT}_{ij}\) is the travel time of the path between the ith demand and the jth source. As an example, consider a simple road network (Fig. 3) with A, B, and C as traffic analysis zones (TAZs), where the weight of every edge is the travel time. After the event, the overall \(WCL\) is 19.5%, and the \(WCL\) for the individual TAZs A, B, and C is 30.8%, 27.7%, and 0%, respectively.

(iv) Nodal and Global Efficiency Loss (\(Eff(loss)\)): Efficiency (Latora and Marchiori 2001; Latora and Marchiori 2003) is a robust metric that has been used in network analysis for communication, neural, or real-world graphs, including infrastructure systems, as it can handle unweighted and weighted, connected and disconnected, undirected and directed, and dense and sparse graphs. It has been chosen because it is more general and avoids the specification of demand and supply nodes in case of scarce data. It is also important when every node is vital, e.g., for the accessibility of hospitals from each point of the city (nodal efficiency) or the connectivity between the important institutional buildings in a city (global efficiency).

The nodal efficiency of each node \(i\) is defined as the normalized sum of the reciprocals of the shortest path lengths from node \(i\) to all other nodes \(j\) in a graph:

$$E_{i} = \frac{1}{N - 1}\sum \limits_{j \ne i} \frac{1}{{d_{ij} }},$$(7)

where \(N\) is the number of nodes, and \({d}_{ij}\) is the shortest path length between node \(i\) and node \(j\). Hub nodes tend to have the highest nodal efficiency. Global efficiency is the mean of all nodes’ nodal efficiency and is therefore inversely related to the shortest path lengths between all pairs of nodes of graph \(G\):

$$E_{glob} = \frac{1}{N}\sum \limits_{i} E_{i} = \frac{1}{N(N - 1)}\sum \limits_{i \ne j} \frac{1}{{d_{ij} }}.$$(8)

The degradation of the efficiency of each node, as well as of the overall network, due to damage to the components and the eventual loss of connection can be evaluated using the normalized efficiency loss metric, given by

$$Eff(loss) = 1 - \frac{{E_{s} }}{{E_{o} }},$$(9)

where \({E}_{s}\) and \({E}_{o}\) are the efficiency after and before the seismic event. For demonstration, Fig. 4 shows a simple weighted graph as the original network (left) and as the network disturbed by an earthquake event (right), along with the nodal efficiency loss. Global efficiency decreased from 0.32 to 0.24, and the global efficiency loss is approximately 26%.
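The efficiency metrics can be sketched with a shortest-path search, following the verbal definitions above. The three-node weighted graph and the function names are hypothetical and do not correspond to Fig. 4; the sketch assumes strictly positive edge weights, counts unreachable nodes as contributing zero, and keeps the node set unchanged between the original and damaged graphs.

```python
import heapq


def shortest_path_lengths(adjacency, source):
    """Dijkstra's algorithm; adjacency maps node -> {neighbour: weight}."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue
        for neighbour, weight in adjacency[node].items():
            candidate = d + weight
            if candidate < dist.get(neighbour, float("inf")):
                dist[neighbour] = candidate
                heapq.heappush(heap, (candidate, neighbour))
    return dist


def nodal_efficiency(adjacency, node):
    """Normalized sum of reciprocal shortest path lengths; unreachable nodes add 0."""
    dist = shortest_path_lengths(adjacency, node)
    n = len(adjacency)
    return sum(1.0 / d for other, d in dist.items() if other != node) / (n - 1)


def global_efficiency(adjacency):
    """Mean of the nodal efficiencies over all nodes."""
    return sum(nodal_efficiency(adjacency, v) for v in adjacency) / len(adjacency)


def efficiency_loss(e_after, e_before):
    """Normalized efficiency loss, 1 - E_s / E_o."""
    return 1.0 - e_after / e_before


# Hypothetical weighted triangle A-B-C; the event removes edge A-C.
original = {"A": {"B": 1.0, "C": 1.0}, "B": {"A": 1.0, "C": 1.0}, "C": {"A": 1.0, "B": 1.0}}
damaged = {"A": {"B": 1.0}, "B": {"A": 1.0, "C": 1.0}, "C": {"B": 1.0}}
e_o, e_s = global_efficiency(original), global_efficiency(damaged)
print(f"global efficiency loss = {efficiency_loss(e_s, e_o):.1%}")
```

Because no supply or demand roles are needed, the same functions apply unchanged to any node of interest, which is the generality that motivates the metric.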

Different metrics can be used according to network topology, the type of evaluation, and results needed by different stakeholders operating and managing different systems (i.e. water supply, electric power network, transportation, natural gas system, and others).
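As a worked illustration of Eqs. (1) to (3), the sketch below computes CCL and PCL on a small network. The topology is hypothetical: it is not the actual layout of Fig. 2, but was chosen so that it reproduces the CCL of 33.3% and the nodal PCL values of 100%, 66.67%, and 66.67% quoted for that figure. The function names are likewise illustrative, and the original graph is assumed to be connected so that every demand node initially reaches at least one source.

```python
from collections import deque


def reachable(adjacency, start):
    """Breadth-first search over an undirected adjacency mapping."""
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in adjacency.get(node, ()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen


def to_adjacency(edges):
    """Build an undirected adjacency mapping from (u, v) pairs."""
    adjacency = {}
    for u, v in edges:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    return adjacency


def sources_per_demand(edges, sources, demands):
    """Number of source nodes reachable from each demand node."""
    adjacency = to_adjacency(edges)
    return {d: len(reachable(adjacency, d) & set(sources)) for d in demands}


def connectivity_losses(edges_before, edges_after, sources, demands):
    n_o = sources_per_demand(edges_before, sources, demands)
    n_s = sources_per_demand(edges_after, sources, demands)
    # CCL, Eq. (1): one minus the ratio of demand nodes still reaching a source.
    ccl = 1.0 - sum(n_s[d] > 0 for d in demands) / sum(n_o[d] > 0 for d in demands)
    # PCL_i, Eq. (2), and PCL, Eq. (3): average loss of reachable sources.
    pcl_i = {d: 1.0 - n_s[d] / n_o[d] for d in demands}
    pcl = sum(pcl_i.values()) / len(demands)
    return ccl, pcl_i, pcl


sources, demands = ["S1", "S2", "S3"], ["D1", "D2", "D3"]
before = [("S1", "A"), ("S2", "A"), ("S3", "B"), ("A", "B"),
          ("A", "D1"), ("B", "D2"), ("B", "D3")]
after = [e for e in before if e not in {("A", "B"), ("A", "D1")}]
ccl, pcl_i, pcl = connectivity_losses(before, after, sources, demands)
print(f"CCL = {ccl:.1%}, PCL = {pcl:.2%}")
```

The weighted variant of Eqs. (4) and (5) would follow the same pattern, with each source count multiplied by a weight such as the one defined in Eq. (6).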

4 Implementation of infrastructure risk in OpenQuake

The preceding short review demonstrates the complexity of infrastructure systems, whose components and elements have heterogeneous typologies and structural/operational characteristics, and the need for a globally recognized, simple, and easily applicable methodology and tool. Moreover, it allowed us to identify the main components of an efficient methodology and to implement it in a powerful tool capable of performing the whole infrastructure risk assessment in a unified and comprehensive manner, all the way from the generation of the seismic event and the source characterization to the connectivity loss of the infrastructure network at risk.

The engine that has been used for this purpose is OpenQuake (https://github.com/gem/oq-engine). The OpenQuake engine is an open-source tool for integrated seismic hazard and risk analyses that is increasingly widely used (Pagani et al. 2014; Silva et al. 2014; Hosseinpour et al. 2021), which is crucial for the wide application of infrastructure risk assessment.

Among its main advantages, one that fits the requirements of this work very well [i.e. challenges (i) and (ii)] is that it allows both scenario and event based probabilistic seismic hazard and risk analyses. Moreover, it incorporates recently published global hazard and risk models (Pagani et al. 2020; Silva et al. 2020), which is a decisive advantage of using this engine for disaster risk reduction. Another strong reason for implementing infrastructure risk in OpenQuake is its large hazard library, consisting of more than 250 ground motion models (GMMs). ShakeMaps produced by the USGS, which have been adopted by many seismic network operators, can also be used with OpenQuake (Silva and Horspool 2019). Spatial correlation of ground motion and cross-correlation between intensity measures, which are prerequisites for seismic hazard and risk assessment of spatially distributed systems, are also available in the OpenQuake engine.

In terms of damage and vulnerability assessment of different kinds of elements at risk, powerful libraries already exist for buildings, which are an indispensable part of the built environment for reaching a systemic risk assessment. A collection of hundreds of empirical and analytical fragility functions has already been introduced and made publicly available through its platform (D’Ayala et al. 2015; Martins and Silva 2021). The library for other elements at risk, e.g. bridges, is also being extensively enriched. In the frame of the present work, specific damage and vulnerability functions have been proposed together with adequate connectivity and performance metrics.

Based on these advantages, it is expected that the implementation of an efficient infrastructure risk module in the OpenQuake engine will provide a uniform and powerful platform that also allows the systemic analysis of systems considering intra- and interdependencies. The implementation made herein takes advantage, on the one hand, of the advanced models and methodologies developed in the past, mainly in SYNER-G, and, on the other hand, of the capabilities offered by the OpenQuake engine. This will allow further improvement of certain parts of the existing methodology, for example with regard to the hazard assessment and the selection of appropriate metrics, which is important to gain wide acknowledgment and applicability.

To provide a common platform, the proposed integrated methodology for infrastructure risk is coded in Python, consistent with the OpenQuake engine. NetworkX (Hagberg et al. 2008) is one of the main Python packages used in the implementation.

In the following, we briefly present the different components of the module for the computation of infrastructure risk at the connectivity level in the OpenQuake engine.

Exposure modelling Exposure models for infrastructure risk assessment contain the location and taxonomy of the components, linked with their fragility/vulnerability class, as well as their function within the system (i.e., demand or source for nodes) and how they connect with each other (i.e., for edges). Generally, nodes are specified as “supply nodes” and “demand nodes”, though it is possible to assign both functions to a single node when an element behaves as both source and demand (e.g., traffic analysis zones in transportation networks; Argyroudis et al. 2015). With this information, the original network of the required type is created in a format compatible with the OpenQuake engine. The type of graph, i.e. simple or multi, directed or undirected, is also specified accordingly. For the evaluation of metrics that need weights, the weights are assigned to edges in terms of an importance factor related to travel time, cost, distance, or other relevant measures. The definition of demand nodes may vary according to the user requirements and the computational cost. In the case of utilities, demand nodes may be individual households or the ends of the mains/submains. Where data are lacking, all nodes other than supply nodes may also be assigned as demand nodes. Similarly, in the case of a transportation system, the demand nodes may be the traffic analysis zones from a survey, the centroids of the main settlements, the ends of main arterial or sub-arterial streets, or critical facilities such as hospitals, fire-fighting stations, or schools.
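The exposure information listed above maps naturally onto node and edge attributes of a graph. The sketch below builds such a network with NetworkX from plain Python records. The record layout, the attribute names (`taxonomy`, `purpose`, `weight`), the identifiers, and the coordinates are hypothetical and do not reproduce the OpenQuake exposure schema.

```python
import networkx as nx

# Hypothetical node records: (id, lon, lat, taxonomy, purpose),
# where purpose is "source", "demand", or "both".
NODES = [
    ("P1", 22.95, 40.64, "pumping_station", "source"),
    ("T1", 22.96, 40.63, "tank", "both"),
    ("D1", 22.94, 40.62, "junction", "demand"),
    ("D2", 22.97, 40.61, "junction", "demand"),
]

# Hypothetical edge records: (start, end, taxonomy, length_km).
EDGES = [
    ("P1", "T1", "pipe_welded_steel", 1.2),
    ("T1", "D1", "pipe_cast_iron", 0.8),
    ("T1", "D2", "pipe_pvc", 1.5),
]


def build_network(nodes, edges, directed=False):
    """Create a simple directed or undirected graph from exposure records."""
    graph = nx.DiGraph() if directed else nx.Graph()
    for node_id, lon, lat, taxonomy, purpose in nodes:
        graph.add_node(node_id, lon=lon, lat=lat, taxonomy=taxonomy, purpose=purpose)
    for start, end, taxonomy, length_km in edges:
        # The length is used as the edge weight; travel time or cost would work equally.
        graph.add_edge(start, end, taxonomy=taxonomy, weight=length_km)
    return graph


def nodes_by_purpose(graph, purpose):
    """Return nodes acting as `purpose`; nodes tagged "both" match either role."""
    return [
        node
        for node, data in graph.nodes(data=True)
        if data["purpose"] in (purpose, "both")
    ]


network = build_network(NODES, EDGES)
sources = nodes_by_purpose(network, "source")
demands = nodes_by_purpose(network, "demand")
```

A multigraph variant would only need `nx.MultiGraph` or `nx.MultiDiGraph` in place of the simple graph classes.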

Seismic hazard modelling OpenQuake already provides a powerful and comprehensive module for hazard analysis to compute various intensity measures (IMs), considering the cross spatial correlation. Both scenario and event based probabilistic seismic hazard analyses, as required for infrastructure risk, can be run.

Fragility, damage, and operationality assessment Fragility models in OpenQuake can be defined either as discrete functions or as cumulative lognormal distribution functions. Generally, in the case of nodes, the selected fragility curves are comparable to those of buildings. However, for edges, whose fragility is in general evaluated empirically, the computation might not be straightforward. For instance, the fragility of pipelines represented as edges may depend on both the length and the selected IMs, i.e. PGV or PGD, and repairs are assumed to follow a Poisson distribution (Hwang et al. 1998). In this case, some preprocessing is required, and the curves are expressed as discrete functions. Also, logic trees consisting of different IMs with respective weights are applied in cases where more than one IM comes into play for a specific component.

Once the damage state of each component is known from the fragility and/or vulnerability functions, the operationality of the components of the system is determined by the selected consequence model. For instance, the model might dictate that a component remains operational up to slight or moderate damage but is deemed non-operational for extensive or complete damage. It is worth mentioning that it is also possible to reduce the capacity of the component, as opposed to using binary states (i.e., operational or non-operational).

Infrastructure risk assessment After determining the operationality of each component, the information is written to the attributes of the network built originally from the exposure model. The non-operational components are then removed from this network. The resulting post-event damaged network is analyzed again, and the overall connections to the nodes in the exposure model are checked. Accordingly, various performance metrics, as explained in Sect. 3.4, are computed according to the type of network, topology, availability of data, and user requirements.
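The two steps just described, applying a consequence model and re-analyzing the damaged network, can be sketched as follows. The binary rule (non-operational for extensive or complete damage) is the example given in the text. The damage state labels, function names, and toy network are assumptions made for illustration and are not the OpenQuake implementation.

```python
import networkx as nx

# Assumed binary consequence model: these damage states make a component non-operational.
NON_OPERATIONAL_STATES = {"extensive", "complete"}


def damaged_network(graph, node_damage, edge_damage):
    """Return a copy of `graph` without the non-operational nodes and edges.

    node_damage maps node ids to damage states; edge_damage maps (u, v) tuples
    to damage states. Components that are not listed are taken as undamaged.
    """
    damaged = graph.copy()
    failed_edges = [e for e, s in edge_damage.items() if s in NON_OPERATIONAL_STATES]
    failed_nodes = [n for n, s in node_damage.items() if s in NON_OPERATIONAL_STATES]
    damaged.remove_edges_from(failed_edges)
    damaged.remove_nodes_from(failed_nodes)  # also drops the incident edges
    return damaged


def connected_demand_nodes(graph, sources, demands):
    """Return the demand nodes that can still reach at least one source."""
    connected = set()
    for demand in demands:
        if demand not in graph:
            continue
        if any(s in graph and nx.has_path(graph, s, demand) for s in sources):
            connected.add(demand)
    return connected


# Toy network (hypothetical): two sources feeding two demand nodes.
toy = nx.Graph([("S1", "D1"), ("S1", "D2"), ("S2", "D2")])
post_event = damaged_network(
    toy,
    node_damage={"S2": "moderate"},
    edge_damage={("S1", "D1"): "complete", ("S1", "D2"): "slight"},
)
still_served = connected_demand_nodes(post_event, ["S1", "S2"], ["D1", "D2"])
```

A capacity-reduction consequence model, as opposed to this binary one, would keep the damaged component in the graph and lower an edge or node attribute instead of removing it.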

The proposed framework and implementation of infrastructure risk assessment based on connectivity analysis can be tailored to various scales, including rural, urban, regional, national, and international scales, and customized with different levels of detail based on user demand, data availability, and computational capacity. To showcase its versatility, we applied our approach to the urban water supply system of Thessaloniki, but it could equally be applied to a cross-border network using the same tool.

5 Illustrative application

To demonstrate the proposed methodology and tool, a realistic case study of the water supply system of Thessaloniki, the second largest city in Greece, is considered. The performance of a water supply system normally covers water delivery, quality, quantity, fire protection, and functionality (Davis 2014). Ensuring good performance of the water system is critical in case of an earthquake event, since the failure of the water supply system also produces large cascading losses in other systems (Mazumder et al. 2021). To ensure better prevention and to prioritize the maintenance of various components under constrained resources and budget, risk analysis at the system level is vital. In the case study presented, both scenario based and probabilistic analyses are applied. The mean annual frequency of exceedance curve for connectivity loss has been computed using the recently released European Seismic Hazard Model 2020 (ESHM20) (Danciu et al. 2021). Furthermore, the sensitivity to the cross spatial correlation has also been analyzed.

5.1 Exposure

Water in Thessaloniki is supplied from various sources including rivers, boreholes, and springs. The supply capacity ranges from 240,000 to 280,000 m³/day and the total supplied population is about one million people. A simplified water distribution system for Thessaloniki, which covers pumping stations, tanks, pipelines, and demand nodes, is shown in Fig. 5. The construction materials of the pipelines include asbestos cement, welded steel, PVC (polyvinyl chloride), and cast iron. Further details about the water distribution network can be found in Pitilakis et al. (2014b).

Fig. 5 Water supply network of Thessaloniki (Pitilakis et al. 2014b [redrawn]); base map © OpenStreetMap Contributors 2017, available from https://www.openstreetmap.org/copyright

5.2 Hazard assessment

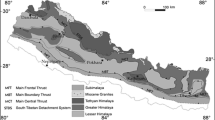

For the earthquake scenario, a rupture simulating the 1978 \({M}_{w}\) 6.5 Thessaloniki earthquake was considered, as proposed by Roumelioti et al. (2007). We applied the ground motion model for active shallow crustal regions by Akkar and Bommer (2010). For the event based probabilistic hazard analysis, the ESHM20 model was used. Ground motion models that were not relevant to Thessaloniki and did not provide PGV, which is required as one of the intensity measures, were eliminated from the logic tree. The local soil conditions have been considered according to available microzonation studies (Anastasiadis et al. 2001). For the cross spatial correlation, the second approach mentioned in Sect. 3.2 is used in the current study: the cross-correlation between different IMs is modelled using the parametric cross-correlation model proposed by Bradley (2012) for PGV, PGA, SA, acceleration spectrum intensity (ASI), and spectrum intensity (SI), and the spatial correlation is modelled following Jayaram and Baker (2009). Both models are implemented in the OpenQuake engine. Median PGA and PGV values from the simulation for the scenario considering the cross spatial correlation are shown in Fig. 6.
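To illustrate what spatial correlation of ground motion means in practice, the sketch below samples spatially correlated standard normal residuals for a single IM using the exponential form of the Jayaram and Baker (2009) model, \(\rho(h) = \exp(-3h/b)\), where \(h\) is the separation distance and \(b\) the correlation range. The range value, the planar site coordinates, and the function names are assumptions chosen for illustration. The cross-correlation between different IMs (Bradley 2012) is not covered here, and the OpenQuake engine provides its own implementation.

```python
import numpy as np


def correlation_matrix(coords_km, range_km):
    """Exponential spatial correlation rho(h) = exp(-3 h / b) between all site pairs."""
    coords = np.asarray(coords_km, dtype=float)
    diff = coords[:, None, :] - coords[None, :, :]
    distance = np.sqrt((diff ** 2).sum(axis=-1))
    return np.exp(-3.0 * distance / range_km)


def sample_residuals(coords_km, range_km, n_samples, seed=0):
    """Draw spatially correlated standard normal residuals, one row per simulation."""
    rng = np.random.default_rng(seed)
    corr = correlation_matrix(coords_km, range_km)
    # A tiny diagonal term keeps the Cholesky factorization numerically stable.
    lower = np.linalg.cholesky(corr + 1e-10 * np.eye(len(corr)))
    independent = rng.standard_normal((n_samples, len(corr)))
    return independent @ lower.T


# Hypothetical sites on a plane (km) and an assumed correlation range of 10 km.
SITES_KM = [(0.0, 0.0), (1.0, 0.0), (30.0, 0.0)]
residuals = sample_residuals(SITES_KM, range_km=10.0, n_samples=20000)
empirical = np.corrcoef(residuals, rowvar=False)
```

Sites 1 km apart end up strongly correlated, whereas the site 30 km away is nearly independent, which is why neighbouring pipes tend to fail together within a single simulated event.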

5.3 Fragility and vulnerability functions

We used the fragility function for buried pipelines proposed by ALA (2001), which relates the repair rate (RR) to PGV and PGD. ALA (2001) is considered more credible as it is based on a larger data set from different geographical regions (Makhoul et al. 2020). The relation, formulated in SI units (Pitilakis et al. 2014a), is given by

where \({K}_{1}\) and \({K}_{2}\) adjust the value based on the material type, connection type, soil type, and pipe diameter (ALA 2001). A repair can be due to a leak or a break. HAZUS (FEMA 2020) suggests that, in the case of permanent ground displacement (PGD), 80% of the repairs will be breaks and 20% will be leaks, and vice versa for PGV. However, susceptibility to liquefaction-induced hazard has not been considered in the present work. The occurrence of repairs along a pipe is assumed to follow a Poisson process with a rate equal to the calculated mean repair rate. The probability that an individual pipe has failed is calculated as the probability of at least one repair, irrespective of whether it is a break or a leak. The probability of failure is given by

where \(L\) is the length of the pipeline. The pipelines in the testbed were grouped into categories based on appropriate length intervals. Then, combining Eqs. (10) and (12), the probability of failure is computed for each length category, using its mean length, for the respective PGV, and the fragility curves are expressed in discrete form in the OpenQuake platform. The fragility curves for the pumping stations are taken from SRMLIFE (2007), as reported in Kakderi and Argyroudis (2014). The pumping stations are regarded as anchored low-rise R/C buildings with high-level seismic design. The tanks are also considered to be anchored, and their fragility curves are taken from HAZUS (FEMA 2020).
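The preprocessing described above, from repair rate to a discrete fragility curve per length category, can be sketched in a few lines. The Poisson assumption gives the probability of at least one repair as \(1 - \exp(-RR \cdot L)\). The coefficient 0.002416 (RR in repairs/km, PGV in cm/s) is the commonly quoted SI form of the ALA (2001) PGV relation and is an assumption here, as are the function names, the PGV levels, and the mean length used in the example.

```python
import math

# Assumed SI form of the ALA (2001) PGV relation: RR = K1 * 0.002416 * PGV,
# with RR in repairs per km and PGV in cm/s.
ALA_PGV_COEFFICIENT = 0.002416


def repair_rate_pgv(pgv_cm_s, k1=1.0):
    """Mean repair rate (repairs/km) for a given PGV (cm/s) and correction factor K1."""
    return k1 * ALA_PGV_COEFFICIENT * pgv_cm_s


def failure_probability(repair_rate_per_km, length_km):
    """Probability of at least one repair for a Poisson count with mean RR * L."""
    return 1.0 - math.exp(-repair_rate_per_km * length_km)


def discrete_fragility(pgv_levels_cm_s, mean_length_km, k1=1.0):
    """Failure probabilities at the given PGV levels for one length category."""
    return [
        failure_probability(repair_rate_pgv(pgv, k1), mean_length_km)
        for pgv in pgv_levels_cm_s
    ]


# Hypothetical length category with a mean pipe length of 2 km.
PGV_LEVELS = [0.0, 10.0, 25.0, 50.0, 100.0]
curve = discrete_fragility(PGV_LEVELS, mean_length_km=2.0, k1=1.0)
```

Because the exponent scales with length, longer pipe categories reach a given failure probability at lower PGV, which is why separate discrete curves are needed per length category.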

5.4 Connectivity analysis

The connectivity analysis is performed according to the methods described in Sect. 3.4, and the performance metrics are computed accordingly. For the current case study of the water supply system, \(CCL\) and \(PCL\) are the metrics of interest. Since edge weights are not yet considered in our module and computation, \(WCL\) is not relevant. Moreover, given the distinction between demand and supply nodes and the absence of weights, \(Eff(loss)\) is also not relevant.

In order to consider uncertainties in the various steps from hazard characterization to computation of connectivity loss, Monte Carlo simulation (MCS) is incorporated in the framework. In the case of the scenario based analysis, 1000 simulations are considered. The cumulative moving average for \(CCL\) and \(PCL\) with and without cross spatial correlation is shown in Fig. 7. The mean value of \(CCL\) is found to be 0.018 and 0.015 with and without cross spatial correlation, respectively. Along with the overall connectivity loss for the case study area, the fraction of simulations in which each node is completely isolated has been checked. The resulting probability of isolation of each demand node, with and without cross spatial correlation, is given in Fig. 8. This type of analysis helps to ensure the connection to critical nodes such as hospitals, fire-fighting stations, schools, and temporary shelters. Some variations due to the cross spatial correlation are seen at the nodal level.

Additionally, it is interesting to check the loss of water at the district level, to identify which part is most vulnerable and, in case of disruption in emergency conditions, to estimate the need for redundant water supply, extra water tanks, and mobile water tankers. For this, we used the population data for each district provided by the census, assuming that the population is uniformly distributed across the area and constant throughout the year. We then extracted the demand nodes within each district and evaluated the mean connectivity loss for each district, considering only the demand nodes within that particular district. To estimate the potential effect on the population, we calculated the product of the mean connectivity loss and the population size of each district. The population map at the district level and the projected number of people affected by the complete loss of water supply according to \(CCL\) are shown in Fig. 9. From this figure, it can be seen that the affected population is greater in the southeastern part. This type of analysis can also be done at a finer level of discretization if the detailed network is accessible.
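The district-level estimate described above is a simple aggregation: average the connectivity loss of the demand nodes in each district and multiply by the district population. A minimal sketch follows, in which the node identifiers, district names, loss values, and populations are all hypothetical.

```python
def affected_population(node_loss, node_district, district_population):
    """Return {district: mean connectivity loss of its demand nodes * population}."""
    losses_by_district = {}
    for node, loss in node_loss.items():
        losses_by_district.setdefault(node_district[node], []).append(loss)
    return {
        district: sum(losses) / len(losses) * district_population[district]
        for district, losses in losses_by_district.items()
    }


# Hypothetical inputs: per-node probability of complete isolation and census data.
NODE_LOSS = {"D1": 0.02, "D2": 0.04, "D3": 0.10}
NODE_DISTRICT = {"D1": "north", "D2": "north", "D3": "southeast"}
DISTRICT_POPULATION = {"north": 50000, "southeast": 80000}

affected = affected_population(NODE_LOSS, NODE_DISTRICT, DISTRICT_POPULATION)
```

The result inherits the uniform population assumption stated in the text, so it should be read as an indicative figure per district rather than a count of specific households.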

The mean value of \(PCL\) is found to be 0.107 and 0.130 with and without cross spatial correlation, respectively. In this case, the mean value is greater than that of \(CCL\) because \(PCL\) gives equal importance to each connected source. The loss of connectivity, \(PCL\), of each demand node is shown in Fig. 10. More variation is seen at the nodal level when cross spatial correlation is considered.

Regarding the consideration of cross spatial correlation at both the urban scale (Fig. 7) and the nodal level (Figs. 8, 10), it can be seen that \(PCL\) is more sensitive than \(CCL\). This is because \(CCL\) considers the connectivity of a demand node to just any one of the sources, while the topology of the water distribution system of Thessaloniki is grid-like and therefore possesses many redundancies. In contrast, \(PCL\) evaluates the connectivity to each source, which means that a greater number of paths and vertices are involved in its computation than for \(CCL\).
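The difference between the two metrics can be made concrete with a short sketch. It assumes the definitions implied by the discussion above: for one realization, \(CCL\) is one minus the fraction of demand nodes still connected to at least one source, and \(PCL\) is one minus the average, over demand nodes, of the fraction of originally reachable sources that remain reachable. The network is taken as undirected, edge failures are sampled independently (so spatial correlation is ignored), and all names and probabilities are hypothetical.

```python
import random

import networkx as nx


def sources_reached(graph, demand, sources):
    """Number of sources in the same connected component as `demand` (undirected graph)."""
    if demand not in graph:
        return 0
    component = nx.node_connected_component(graph, demand)
    return sum(1 for source in sources if source in component)


def connectivity_losses(original, damaged, sources, demands):
    """Return (CCL, PCL) of the damaged network relative to the original one."""
    before = {d: sources_reached(original, d, sources) for d in demands}
    after = {d: sources_reached(damaged, d, sources) for d in demands}
    served = [d for d in demands if before[d] > 0]
    if not served:
        return 0.0, 0.0
    ccl = 1.0 - sum(1 for d in served if after[d] > 0) / len(served)
    pcl = 1.0 - sum(after[d] / before[d] for d in served) / len(served)
    return ccl, pcl


def simulate(original, edge_failure_prob, sources, demands, n_sim=1000, seed=42):
    """Monte Carlo loop with independent edge failures; returns a list of (CCL, PCL)."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_sim):
        damaged = original.copy()
        failed = [edge for edge, p in edge_failure_prob.items() if rng.random() < p]
        damaged.remove_edges_from(failed)
        results.append(connectivity_losses(original, damaged, sources, demands))
    return results


def cumulative_mean(values):
    """Cumulative moving average, useful for checking convergence of the simulation."""
    means, total = [], 0.0
    for count, value in enumerate(values, start=1):
        total += value
        means.append(total / count)
    return means


# Toy network (hypothetical): demand node D1 is fed by two sources, D2 via S1 only.
grid = nx.Graph([("S1", "D1"), ("S2", "D1"), ("S1", "D2")])
one_link_lost = grid.copy()
one_link_lost.remove_edge("S2", "D1")
ccl, pcl = connectivity_losses(grid, one_link_lost, ["S1", "S2"], ["D1", "D2"])
```

In the toy case every demand node keeps at least one source after the link S2-D1 is lost, so \(CCL\) stays at zero while \(PCL\) rises to 0.5, which mirrors the greater sensitivity of \(PCL\) in redundant, grid-like networks.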

For the event based probabilistic analysis, the mean annual frequency (MAF) of exceedance curves for \(CCL\) and \(PCL\) with and without the cross spatial correlation are shown in Fig. 11. As expected, since \(PCL\) takes into account all sources, its values are higher than those of \(CCL\) at a given frequency of exceedance. For a return period of 500 years, the corresponding values of \(CCL\) and \(PCL\) are approximately 0.11 and 0.97. Also, from Fig. 11, it can be seen that for a more grid-like network, overlooking cross spatial correlation leads to a larger underestimation in the case of \(PCL\). For example, for a return period of 100 years, the connectivity loss increases from about 0.6 without cross spatial correlation to about 0.8 with it. This means that the average reduction of service to the demand nodes from the supplies would be underestimated by about 20 percentage points if cross spatial correlation were not considered. In general, it is observed that in the case of the Thessaloniki water system, the cross spatial correlation can play a significant role.
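A mean annual frequency of exceedance curve of this kind can be derived from a stochastic event set by counting, for each loss threshold, the events that exceed it and dividing by the effective investigation time. The sketch below shows this counting approach under that assumption. The function names and the toy losses are hypothetical, and the curves in Fig. 11 were produced with the OpenQuake engine, not with this code.

```python
def exceedance_curve(event_losses, investigation_time_years, thresholds):
    """MAF of exceedance for each threshold: count(loss > x) / effective time."""
    return [
        sum(1 for loss in event_losses if loss > x) / investigation_time_years
        for x in thresholds
    ]


def loss_at_return_period(event_losses, investigation_time_years, return_period_years):
    """Loss equalled or exceeded, on average, once per return period.

    Returns None when the event set is too short to resolve the return period,
    and 0.0 when fewer events than required exist (periods without any loss).
    """
    n_exceedances = int(investigation_time_years / return_period_years)
    if n_exceedances < 1:
        return None
    ranked = sorted(event_losses, reverse=True)
    return ranked[n_exceedances - 1] if n_exceedances <= len(ranked) else 0.0


# Hypothetical connectivity losses of five events in a 10-year event set.
EVENT_LOSSES = [0.9, 0.5, 0.5, 0.2, 0.0]
maf = exceedance_curve(EVENT_LOSSES, 10.0, thresholds=[0.0, 0.4, 0.8])
```

In a real application the effective investigation time would be far longer than the return periods of interest, so that the upper tail of the curve rests on more than a handful of events.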

With this, we showed that the implementation is helpful for evaluating the performance of the infrastructure with a systemic approach at the global, nodal, or district level. This will help decision makers and stakeholders to carry out various mitigation (ex-ante) and response (ex-post) measures. For the prioritization of strengthening or retrofitting of the various components, several approaches can be considered, such as a correlation analysis between the damage data of the components and the connectivity loss. This allows us to identify which components are most strongly associated with connectivity loss and to prioritize their upgrading/retrofitting accordingly. Another approach could be a sensitivity analysis to determine how changes in the damage status of each component of the water supply system affect the overall connectivity loss of the system. By ranking the pipelines based on their own damageability and sensitivity scores, we can then identify the most critical components, i.e. those with the highest impact on the connectivity of the system. It is also worth mentioning that strengthening the components according to their sensitivity to connectivity loss might not always be the optimum solution, since feasibility issues and a specific cost-benefit analysis should also be accounted for. If the components lie in locations where intervention is unfeasible, such as busy areas and historical centers, it might be better to add an alternative bypass to the nodes of importance. Therefore, optimization techniques or Bayesian network approaches for decision support, as highlighted in the studies of Filippini and Silva (2014), Johansen and Tien (2018), and Kameshwar et al. (2019), can be used.

6 Conclusions

The aim of this work is to introduce an updated methodology to assess infrastructure risk within a powerful platform that allows easy and global usage. The paper first briefly discusses the features of various existing tools that support infrastructure risk assessment. Then it provides a methodology and metrics, built upon previous work, that are uniform and capture the majority of infrastructures at the system level, discussing the various underlying prerequisites, issues, and challenges. Aiming for extensive and easy usage and global application, the proposed model for infrastructure risk at the connectivity level has been implemented in an open tool for seismic loss assessment, namely the OpenQuake engine. As an illustration, the tool has been applied to the testbed of the water supply system of Thessaloniki, Greece.

The main contributions of the present work are highlighted as follows.

- The main challenges of risk assessment of critical infrastructures at the system level and a review of some existing robust tools for infrastructure risk assessment have been briefly presented and discussed. As a weakness of previous works, it has been perceived that the inherent complexity of infrastructure risk assessment and the lack of a common platform from hazard characterization to system level risk assessment have constrained the extent of infrastructure risk assessment globally.

- A methodology and metrics, built upon the wealth of previous work, that are uniform and can capture the majority of infrastructure systems have been proposed. Targeting worldwide and extensive usage, infrastructure risk assessment consistent with the presented methodology has been incorporated into a contemporary, globally used open tool for seismic loss assessment, namely the OpenQuake engine. The main advantages of this implementation are the powerful seismic hazard capabilities that already exist and the database of fragility functions for buildings, which will greatly aid in reaching a systemic risk assessment considering the interdependencies. Different stakeholders can now have a common platform from earthquake source modelling and risk analysis at the component level to risk analysis of infrastructures at the connectivity level considering intra-dependencies.

- To demonstrate the capabilities of the elaborated tool, we selected the water distribution system of Thessaloniki in Greece as a testbed. We considered both scenario based and event based probabilistic analyses and computed the suitable performance metrics. The performance was checked at the city scale, district level, and nodal level, considering the system behavior. This type of analysis may assist decision makers in initiating proactive and reactive measures for effective disaster risk management.

- The sensitivity of various performance metrics to cross spatial correlation was also studied. For a more grid-like system such as the water distribution system of Thessaloniki, partial connectivity loss is more sensitive to cross spatial correlation than complete connectivity loss.

It is hoped that this implementation will promote the development of models for cross spatial correlation that take into account various intensity measures and also the fragility models for various infrastructure system components. Eventually, the next step of this work will consider interdependencies between various systems and flow-based approaches which are very important for the infrastructure risk assessment as they generally increase the impact and loss. Furthermore, the future study shall also entail correlation and sensitivity analyses, taking into consideration feasibility studies that can help in specific prioritization and mitigation actions through in-depth evaluation for potential risk reduction.

Data Availability

Not applicable.

Code Availability

For the implementation of the critical infrastructure risk in OpenQuake, the coding was done in Python, mainly using the NetworkX library along with others. All calculations have been undertaken with the OpenQuake engine, an open-source software for hazard and risk assessment that is available from: https://github.com/gem/oq-engine. Some maps were generated with Python and QGIS.

Change history

26 May 2023

A Correction to this paper has been published: https://doi.org/10.1007/s10518-023-01707-w

References

Abrahamson NA, Silva WJ, Kamai R (2014) Summary of the ASK14 ground motion relation for active crustal regions. Earthq Spectra 30:1025–1055. https://doi.org/10.1193/070913EQS198M

Adachi T, Ellingwood BR (2009) Serviceability assessment of a municipal water system under spatially correlated seismic intensities. Comput Aided Civ Infrastruct Eng 24:237–248. https://doi.org/10.1111/j.1467-8667.2008.00583.x

Akkar S, Bommer JJ (2010) Empirical equations for the prediction of PGA, PGV, and spectral accelerations in Europe, the Mediterranean region, and the middle east. Seismol Res Lett 81:195–206. https://doi.org/10.1785/gssrl.81.2.195

Albert R, Jeong H, Barabási A-L (2000) Error and attack tolerance of complex networks. Nature 406:378–382. https://doi.org/10.1038/35019019

American Lifelines Alliance (ALA) (2001) Seismic fragility formulation for water systems, Part 1-Guidelines, ASCE-FEMA, United States of America

Anastasiadis A, Raptakis D, Pitilakis K (2001) Thessaloniki’s detailed microzoning: subsurface structure as basis for site response analysis. Pure Appl Geophys. https://doi.org/10.1007/PL00001188

Argyroudis S, Selva J, Gehl P, Pitilakis K (2015) Systemic seismic risk assessment of road networks considering interactions with the built environment. Comput Aided Civ Infrastruct Eng 30(7):524–540. https://doi.org/10.1111/mice.12136

Atkinson GM, Boore DM (1995) Ground-motion relations for eastern North America. Bull Seismol Soc America 85:17–30. https://doi.org/10.1785/BSSA0850010017

Baag C-E, Chang S-J, Jo N-D, Shin J-S (1998) Evaluation of seismic hazard in the southern part of Korea. In: Proceedings of the second international symposium on seismic hazards and ground motion in the region of moderate seismicity. Reported in Klise et al (2017)

Bellagamba X, Bradley B, Wotherspoon L, Hughes M (2019) Development and validation of fragility functions for buried pipelines based on canterbury earthquake sequence data. Earthq Spectra 35(3):1061–1086. https://doi.org/10.1193/120917EQS253M

Biggs N, Lloyd EK, Wilson RJ (1986) Graph theory, 1736–1936. Oxford University Press

Boore DM, Atkinson GM (2008) Ground-motion prediction equations for the average horizontal component of PGA, PGV, and 5%-damped PSA at spectral periods between 0.01 s and 10.0 s. Earthq Spectra 24:99–138. https://doi.org/10.1193/1.2830434

Bradley BA (2012) Empirical correlations between peak ground velocity and spectrum-based intensity measures. Earthq Spectra 28(1):37–54. https://doi.org/10.1193/1.3675582

Campbell KW, Bozorgnia Y (2014) NGA-West2 ground motion model for the average horizontal components of PGA, PGV, and 5% damped linear acceleration response spectra. Earthq Spectra 30:1087–1115. https://doi.org/10.1193/062913EQS175M

Cardoni A, Cimellaro GP, Domaneschi M, Sordo S, Mazza A (2020) Modeling the interdependency between buildings and the electrical distribution system for seismic resilience assessment. Int J Disaster Risk Reduct 42:101315. https://doi.org/10.1016/j.ijdrr.2019.101315

Cavalieri F, Franchin P, Buriticá Cortés JAM, Tesfamariam S (2014) Models for seismic vulnerability analysis of power networks: comparative assessment. Comput Aided Civ Infrastruct Eng 29:590–607. https://doi.org/10.1111/mice.12064

Chiou BS-J, Youngs RR (2014) Update of the Chiou and Youngs NGA model for the average horizontal component of peak ground motion and response spectra. Earthq Spectra 30:1117–1153. https://doi.org/10.1193/072813EQS219M

Cimellaro GP, Tinebra A, Renschler C, Fragiadakis M (2016) New resilience index for urban water distribution networks. J Struct Eng 142:C4015014. https://doi.org/10.1061/(ASCE)ST.1943-541X.0001433

Cormen TH, Leiserson CE, Rivest RL, Stein C (2022) Introduction to algorithms, 4th edn. MIT Press

Costa C, Silva V, Bazzurro P (2018) Assessing the impact of earthquake scenarios in transportation networks: the Portuguese mining factory case study. Bull Earthquake Eng 16:1137–1163. https://doi.org/10.1007/s10518-017-0243-2

Council Directive (2008) 114/EC on the identification and designation of European critical infrastructures and the assessment of the need to improve their protection. Off J Eur Union 23:2008

D’Ayala D, Meslem A, Vamvatsikos D, Porter K, Rossetto T, Silva V (2015) GEM guidelines for analytical vulnerability assessment of low/mid-rise buildings. Global Earthquake Model. www.nexus.globalquakemodel.org/gem-vulnerability/posts/

Danciu L, Nandan S, Reyes C, Basili R, Weatherill G, Beauval C, Rovida A, Vilanova S, Şeşetyan K, Bard PY, Cotton F (2021) The 2020 update of the European seismic hazard model: model overview. EFEHR Technical Report 001, v1.0.0

Davis CA (2014) Water system service categories, post-earthquake interaction, and restoration strategies. Earthq Spectra 30:1487–1509. https://doi.org/10.1193/022912EQS058M

De Felice F, Baffo I, Petrillo A (2022) Critical infrastructures overview: past present and future. Sustainability 14:2233. https://doi.org/10.3390/su14042233

Dijkstra EW (1959) A note on two problems in connexion with graphs. Numer Math 1:269–271. https://doi.org/10.1007/BF01386390

Dueñas-Osorio L, Craig JI, Goodno BJ (2007) Seismic response of critical interdependent networks. Earthq Eng Struct Dyn 36:285–306. https://doi.org/10.1002/eqe.626

Esposito S, Iervolino I, d’Onofrio A, Santo A, Cavalieri F, Franchin P (2015) Simulation-based seismic risk assessment of gas distribution networks. Comput Aided Civ Infrastruct Eng 30:508–523. https://doi.org/10.1111/mice.12105

Evans NL, McGhie C (2011) The performance of lifeline utilities following the 27th February 2010 Maule Earthquake Chile. In: Proceedings of the ninth Pacific conference on earthquake engineering building an earthquake-resilient society, pp 14–16

FEMA (2020) Hazus Earthquake Model User Guidance

Filippini R, Silva A (2014) A modeling framework for the resilience analysis of networked systems-of-systems based on functional dependencies. Reliab Eng Syst Saf 125:82–91. https://doi.org/10.1016/j.ress.2013.09.010

Franchin P, Cavalieri F (2013) Seismic vulnerability analysis of a complex interconnected civil infrastructure. In: Handbook of seismic risk analysis and management of civil infrastructure systems. Woodhead Publishing, pp 465–514

Giovinazzi S, Wilson TM, Davis C, Bristow D, Gallagher M, Schofield A, Villemure M, Eidinger J, Tang A (2011) Lifelines performance and management following the 22 February 2011 Christchurch earthquake, New Zealand: highlights of resilience. Bull New Zealand Soc Earthquake Eng. https://doi.org/10.5459/bnzsee.44.4.402-417

Government of Nepal (2015) Post disaster needs assessment. Nepal Planning Commission, Kathmandu

Guidotti R, Gardoni P, Chen Y (2017) Network reliability analysis with link and nodal weights and auxiliary nodes. Struct Safety 65:12–26. https://doi.org/10.1016/j.strusafe.2016.12.001

Hagberg AA, Schult DA, Swart PJ (2008) Exploring network structure, dynamics, and function using NetworkX. In: Varoquaux G, Vaught T, Millman J (eds) Proceedings of the 7th Python in Science Conference (SciPy2008). Pasadena, CA, USA, pp 11–15

Hallegatte S, Rentschler J, Rozenberg J (2019) Lifelines: the resilient infrastructure opportunity. World Bank Publications

Hosseinpour V, Saeidi A, Nollet M-J, Nastev M (2021) Seismic loss estimation software: a comprehensive review of risk assessment steps, software development and limitations. Eng Struct 232:111866. https://doi.org/10.1016/j.engstruct.2021.111866

Houlihan B (1994) Europe’s ageing infrastructure: politics, finance and the environment. Util Policy 4(4):243–252. https://doi.org/10.1016/0957-1787(94)90015-9

Hwang HHM, Lin H, Shinozuka M (1998) Seismic performance assessment of water delivery systems. J Infrastruct Syst 4:118–125. https://doi.org/10.1061/(ASCE)1076-0342(1998)4:3(118)

Jayaram N, Baker JW (2009) Correlation model for spatially distributed ground-motion intensities. Earthq Eng Struct Dyn 38(15):1687–1708. https://doi.org/10.1002/eqe.922

Jayaram N, Baker JW (2010) Efficient sampling and data reduction techniques for probabilistic seismic lifeline risk assessment. Earthq Eng Struct Dyn 39(10):1109–1131. https://doi.org/10.1002/eqe.988

Johansen C, Tien I (2018) Probabilistic multi-scale modeling of interdependencies between critical infrastructure systems for resilience. Sustain Resilient Infrastruct 3:1–15. https://doi.org/10.1080/23789689.2017.1345253

Kakderi K, Argyroudis S (2014) Fragility functions of water and waste-water systems. In: Pitilakis K, Crowley H, Kaynia AM (eds) SYNER-G: typology definition and fragility functions for physical elements at seismic risk: buildings, lifelines, transportation networks and critical facilities. Springer, Dordrecht, pp 221–258

Kameshwar S, Cox DT, Barbosa AR et al (2019) Probabilistic decision-support framework for community resilience: incorporating multi-hazards, infrastructure interdependencies, and resilience goals in a Bayesian network. Reliab Eng Syst Saf 191:106568. https://doi.org/10.1016/j.ress.2019.106568

Kawashima K, Aizawa K, Takahashi K (1984) Attenuation of peak ground motion and absolute acceleration response spectra. In: Proceedings of Eighth World Conference on Earthquake Engineering, volume II. Reported in Klise et al (2017)

Klise KA, Bynum M, Moriarty D, Murray R (2017) A software framework for assessing the resilience of drinking water systems to disasters with an example earthquake case study. Environ Model Softw 95:420–431. https://doi.org/10.1016/j.envsoft.2017.06.022

Kongar I (2017) Seismic risk assessment of complex urban critical infrastructure networks. Doctoral dissertation, University College London (UCL)

Latora V, Marchiori M (2001) Efficient behavior of small-world networks. Phys Rev Lett 87(19):198701. https://doi.org/10.1103/PhysRevLett.87.198701

Latora V, Marchiori M (2003) Economic small-world behavior in weighted networks. Eur Phys J B Condens Matter Complex Syst 32(2):249–263. https://doi.org/10.1140/epjb/e2003-00095-5

Lee K, Cho KH (2002) Attenuation of peak horizontal acceleration in the Sino-Korean Craton. In: The Proceedings of the Annual Fall Conference of Earthquake Engineering Society of Korea. Reported in Klise et al (2017)

Lee JS, Navarro CM, Tolbert N, et al (2019) Interdependent networked community resilience modeling environment (INCORE). In: Proceedings of the practice and experience in advanced research computing on rise of the machines (learning). Association for Computing Machinery, New York, pp 1–2. https://doi.org/10.1145/3332186.3333150

Lemnitzer A, Arduino P, Dafni J, Franke KW, Martinez A, Mayoral J, El Mohtar C, Pehlivan M, Yashinsky M (2021) The September 19, 2017 Mw 7.1 Central-Mexico earthquake: immediate observations on selected infrastructure systems. Soil Dyn Earthq Eng 141:106430. https://doi.org/10.1016/j.soildyn.2020.106430

Li M, Ye T, Shi P, Fang J (2015) Impacts of the global economic crisis and Tohoku earthquake on Sino–Japan trade: a comparative perspective. Nat Hazards 75(1):541–556. https://doi.org/10.1007/s11069-014-1335-9

Makhoul N, Navarro C, Lee JS, Gueguen P (2020) A comparative study of buried pipeline fragilities using the seismic damage to the Byblos wastewater network. Int J Disaster Risk Reduct 51:101775. https://doi.org/10.1016/j.ijdrr.2020.101775

Martins L, Silva V (2021) Development of a fragility and vulnerability model for global seismic risk analyses. Bull Earthq Eng 19:6719–6745. https://doi.org/10.1007/s10518-020-00885-1

Mazumder RK, Salman AM, Li Y, Yu X (2021) Asset management decision support model for water distribution systems: impact of water pipe failure on road and water networks. J Water Resour Plan Manag 147:04021022. https://doi.org/10.1061/(ASCE)WR.1943-5452.0001365

Nakashima M, Lavan O, Kurata M, Luo Y (2014) Earthquake engineering research needs in light of lessons learned from the 2011 Tohoku earthquake. Earthq Eng Eng Vib 13(1):141–149. https://doi.org/10.1007/s11803-014-0244-y

Newman ME (2003) The structure and function of complex networks. SIAM Rev 45(2):167–256. https://doi.org/10.1137/S003614450342480

OpenStreetMap Contributors (2017) Planet dump. Retrieved from https://planet.osm.org

Ouyang M (2014) Review on modeling and simulation of interdependent critical infrastructure systems. Reliab Eng Syst Saf 121:43–60. https://doi.org/10.1016/j.ress.2013.06.040

Pagani M, Monelli D, Weatherill G, Danciu L, Crowley H, Silva V, Henshaw P, Butler L, Nastasi M, Panzeri L, Simionato M, Vigano D (2014) OpenQuake engine: an open hazard (and risk) software for the Global Earthquake Model. Seismol Res Lett 85(3):692–702. https://doi.org/10.1785/0220130087

Pagani M, Garcia-Pelaez J, Gee R, Johnson K, Poggi V, Silva V, Simionato M, Styron R, Viganò D, Danciu L, Monelli D (2020) The 2018 version of the Global Earthquake Model: hazard component. Earthq Spectra 36(1_suppl):226–251. https://doi.org/10.1177/8755293020931866

PCCIP (1997) Critical foundations: protecting America’s infrastructures, report of the President’s commission on critical infrastructure protection

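Pitilakis K, Crowley H, Kaynia AM (eds) (2014a) SYNER-G: typology definition and fragility functions for physical elements at seismic risk: buildings, lifelines, transportation networks and critical facilities. Springer, Dordrecht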

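Pitilakis K, Franchin P, Khazai B, Wenzel H (eds) (2014b) SYNER-G: systemic seismic vulnerability and risk assessment of complex urban, utility, lifeline systems and critical facilities. Springer, Dordrecht. https://doi.org/10.1007/978-94-017-8835-9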

Poljanšek K, Bono F, Gutiérrez E (2012) Seismic risk assessment of interdependent critical infrastructure systems: the case of European gas and electricity networks. Earthq Eng Struct Dyn 41:61–79. https://doi.org/10.1002/eqe.1118

Pribadi KS, Abduh M, Wirahadikusumah RD, Hanifa NR, Irsyam M, Kusumaningrum P, Puri E (2021) Learning from past earthquake disasters: the need for knowledge management system to enhance infrastructure resilience in Indonesia. Int J Disaster Risk Reduct 64:102424. https://doi.org/10.1016/j.ijdrr.2021.102424

Romero N, O’Rourke TD, Nozick LK, Davis CA (2010) Seismic hazards and water supply performance. J Earthquake Eng 14(7):1022–1043. https://doi.org/10.1080/13632460903527989

Roumelioti Z, Theodulidis N, Kiratzi A (2007) The 20 June 1978 Thessaloniki (Northern Greece) earthquake revisited: slip distribution and forward modeling of geodetic and seismological observations. In: 4th International conference on earthquake geotechnical engineering, pp 25–28

Sathurshan M, Saja A, Thamboo J, Haraguchi M, Navaratnam S (2022) Resilience of critical infrastructure systems: a systematic literature review of measurement frameworks. Infrastructures 7(5):67. https://doi.org/10.3390/infrastructures7050067

Sharma N, Nocera F, Gardoni P (2021) Classification and mathematical modeling of infrastructure interdependencies. Sustain Resilient Infrastruct 6(1–2):4–25. https://doi.org/10.1080/23789689.2020.1753401

Silva V, Horspool N (2019) Combining USGS ShakeMaps and the OpenQuake-engine for damage and loss assessment. Earthq Eng Struct Dyn 48(6):634–652

Silva V, Crowley H, Pagani M, Monelli D, Pinho R (2014) Development of the OpenQuake engine, the Global Earthquake Model’s open-source software for seismic risk assessment. Nat Hazards 72(3):1409–1427. https://doi.org/10.1007/s11069-013-0618-x

Silva V, Akkar S, Baker J, Bazzurro P, Castro JM, Crowley H, Dolsek M, Galasso C, Lagomarsino S, Monteiro R, Perrone D, Pitilakis K, Vamvatsikos D (2019) Current challenges and future trends in analytical fragility and vulnerability modeling. Earthq Spectra 35(4):1927–1952. https://doi.org/10.1193/042418EQS101O

Silva V, Amo-Oduro D, Calderon A, Costa C, Dabbeek J, Despotaki V, Martins L, Pagani M, Rao A, Simionato M, Viganò D (2020) Development of a global seismic risk model. Earthq Spectra 36(1_suppl):372–394. https://doi.org/10.1177/8755293019899953

Smerzini C, Pitilakis K (2018) Seismic risk assessment at urban scale from 3D physics-based numerical modeling: the case of Thessaloniki. Bull Earthq Eng 16(7):2609–2631. https://doi.org/10.1007/s10518-017-0287-3

SRMLIFE (2007) Development of a global methodology for the vulnerability assessment and risk management of lifelines, infrastructures and critical facilities. Application to the metropolitan area of Thessaloniki. Research project, General Secretariat for Research and Technology, Greece

Todd DR, Carino NJ, Chung RM, Lew HS, Taylor AW, Walton WD (1994) 1994 Northridge earthquake: performance of structures, lifelines and fire protection systems. https://doi.org/10.6028/NIST.IR.5396

Toro GR, Abrahamson NA, Schneider JF (1997) Model of strong ground motions from earthquakes in Central and Eastern North America: best estimates and uncertainties. Seismol Res Lett 68:41–57. https://doi.org/10.1785/gssrl.68.1.41

United Nations (2019) World Urbanization Prospects 2018: Highlights

Van Breugel K (2017) Societal burden and engineering challenges of ageing infrastructure. Procedia Eng 171:53–63. https://doi.org/10.1016/j.proeng.2017.01.309

Weatherill GA, Silva V, Crowley H, Bazzurro P (2015) Exploring the impact of spatial correlations and uncertainties for portfolio analysis in probabilistic seismic loss estimation. Bull Earthq Eng 13:957–981. https://doi.org/10.1007/s10518-015-9730-5

Weatherill G, Esposito S, Iervolino I, Franchin P, Cavalieri F (2014) Framework for seismic hazard analysis of spatially distributed systems. In: SYNER-G: systemic seismic vulnerability and risk assessment of complex urban, utility, lifeline systems and critical facilities. Springer, Dordrecht, pp 57–88. https://doi.org/10.1007/978-94-017-8835-9

Worden CB, Thompson EM, Baker JW et al (2018) Spatial and spectral interpolation of ground-motion intensity measure observations. Bull Seismol Soc Am 108:866–875. https://doi.org/10.1785/0120170201

Yepes-Estrada C, Silva V, Rossetto T, D’Ayala D, Ioannou I, Meslem A, Crowley H (2016) The Global Earthquake Model physical vulnerability database. Earthq Spectra 32(4):2567–2585. https://doi.org/10.1193/011816EQS015DP

Yu YX, Jin CY (2008) Empirical peak ground velocity attenuation relations based on digital broadband records. In: 14th World Conference on Earthquake Engineering. Reported in Klise et al (2017)

Acknowledgements

The present work was carried out within the framework of Grant Agreement No. 813137, funded by the European Commission ITN-Marie Sklodowska-Curie project “New Challenges for Urban Engineering Seismology (URBASIS-EU)”. We also acknowledge the contributors to the SYNER-G project, funded by the European Community’s 7th Framework Program under Grant No. 244061, on which many of the conceptual frameworks used here are built.

Funding

Open access funding provided by HEAL-Link Greece. The work was carried out within the framework of the European Commission ITN-Marie Sklodowska-Curie URBASIS-EU project (Grant Agreement No. 813137), from which the first author, Astha Poudel, received funding.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Poudel, A., Pitilakis, K., Silva, V. et al. Infrastructure seismic risk assessment: an overview and integration to contemporary open tool towards global usage. Bull Earthquake Eng 21, 4237–4262 (2023). https://doi.org/10.1007/s10518-023-01693-z

DOI: https://doi.org/10.1007/s10518-023-01693-z