Abstract

This paper presents an assessment of the seasonal prediction skill of current global circulation models, with a focus on the two-meter air temperature and precipitation over the Southeast United States. The model seasonal hindcasts are analyzed using measures of potential predictability, anomaly correlation, Brier skill score, and Gerrity skill score. The systematic differences in prediction skill of coupled ocean–atmosphere models versus models using prescribed (either observed or predicted) sea surface temperatures (SSTs) are documented. It is found that the predictability and the hindcast skill of the models vary seasonally and spatially. The largest potential predictability (signal-to-noise ratio) of precipitation anywhere in the United States is found in the Southeast in the spring and winter seasons. The maxima in the potential predictability of two-meter air temperature, however, reside outside the Southeast in all seasons. The largest deterministic hindcast skill over the Southeast is found in wintertime precipitation. At the same time, the boreal winter two-meter air temperature hindcasts have the smallest skill. The large wintertime precipitation skill, the lack of corresponding two-meter air temperature hindcast skill, and a lack of precipitation skill in any other season are features common to all three types of models (atmospheric models forced with observed SSTs, atmospheric models forced with predicted SSTs, and coupled ocean–atmosphere models). Atmospheric models with observed SST forcing demonstrate a moderate skill in hindcasting spring- and summertime two-meter air temperature anomalies, whereas coupled models and atmospheric models forced with predicted SSTs lack similar skill. Probabilistic and categorical hindcasts mirror the deterministic findings, i.e., there is very high skill for winter precipitation and none for summer precipitation. When skillful, the models are conservative, such that low-probability hindcasts tend to be overestimates, whereas high-probability hindcasts tend to be underestimates.

References

Barnston AG (1994) Linear statistical short-term climate predictive skill in the Northern Hemisphere. J Climate 7:1513–1564

Brankovic C, Palmer TN (2000) Seasonal skill and predictability of ECMWF PROVOST ensembles. Q J Roy Meteor Soc 126(567):2035–2067

Cocke S, LaRow TE, Shin DW (2007) Seasonal rainfall predictions over the southeast United States using the Florida State University nested regional spectral model. J Geophys Res 112. doi:10.1029/2006JD007535

Gates WL, Boyle J, Cove C, Dease C, Doutriaux C, Drach R, Fiorino M, Gleckler P, Hnilo J, Marlais S, Phillips T, Potter G, Santer BD, Sperber KR, Taylor K, Williams D (1999) An overview of the results of the Atmospheric Model Intercomparison Project (AMIP I). Bull Amer Meteorol Soc 80:29–55

Gerrity JP (1992) A note on Gandin and Murphy’s equitable skill score. Mon Wea Rev 120:2709–2712

Hardy JW, Henderson KG (2003) Cold front variability in the southern United States and the influence of atmospheric teleconnection patterns. Phys Geogr 24:120–137

Higgins RW, Leetmaa A, Xue Y, Barnston A (2000) Dominant factors influencing the seasonal predictability of US precipitation and surface air temperature. J Climate 13:3994–4017

Kanamitsu M, Ebisuzaki W, Woollen J, Yang S-K, Hnilo JJ, Fiorino M, Potter GL (2002) NCEP-DOE AMIP-II reanalysis (R-2). Bull Amer Meteorol Soc 83:1631–1643

Kiladis GN, Diaz HF (1989) Global climate extremes associated with extremes of the Southern oscillation. J Climate 2:1069–1090

Kirtman BP, Shukla J, Balmaseda M, Graham N, Penland C, Xue Y, Zebiak S (2002) Current status of ENSO forecast skill: a report to the Climate Variability and Predictability (CLIVAR) Numerical Experimentation Group (NEG). CLIVAR Working group on seasonal to interannual prediction. p 31

Kumar A, Hoerling MP (1995) Prospects and limitations of seasonal atmospheric GCM predictions. Bull Amer Meteorol Soc 76:335–345

Liou C-S, Chen J-H, Terng C-T, Wang F-J, Fong C-T, Rosmond TE, Kuo H-C, Shiao C-H, Cheng M-D (1997) The second-generation global forecast system at the Central Weather Bureau in Taiwan. Weather Forecast 12(3):653–663

Markowski GR, North GR (2003) Climatic influence of sea surface temperature: evidence of substantial precipitation correlation and predictability. J Hydromet 4:856–877

Misra V, Dirmeyer PA (2009) Air, sea, and land interactions of the continental US hydroclimate. J Hydromet 10:353–373

Murphy AH (1973) A new vector partition of the probability score. J Appl Meteor 12:595–600

Reynolds RW, Rayner NA, Smith TM, Stokes DC, Wang W (2002) An improved in situ and satellite SST analysis for climate. J Climate 15:1609–1625

Ropelewski CF, Halpert MS (1986) North American precipitation and temperature patterns associated with the El Niño/Southern Oscillation (ENSO). Mon Wea Rev 114:2352–2362

Ropelewski CF, Halpert MS (1987) Global and regional scale precipitation patterns associated with the El Niño/Southern Oscillation. Mon Wea Rev 115:1606–1626

Saha S, Nadiga S, Thiaw C, Wang J, Wang W, Zhang Q, van den Dool HM, Pan H-L, Moorthi S, Behringer D, Stokes D, Peña M, Lord S, White G, Ebisuzaki W, Peng P, Xie P (2006) The NCEP climate forecast system. J Climate 19:3483–3517

Schneider EK (2002) Understanding differences between the equatorial Pacific as simulated by two coupled GCMs. J Climate 15:449–469

Shneerov BE, Meleshko VP, Matjugin VA, Spryshev PV, Pavlova TV, Vavulin SV, Shkolnik IM, Subov VA, Gavrilina VM, Govorkova VA (2002) The current status of the MGO global atmospheric circulation model (version-MGO-03). MGO Proceedings 550:3–43

Shukla J (1998) Predictability in the midst of chaos: a scientific basis for climate forecasting. Science 282(5389):728–731

Shukla J, Anderson J, Baumhefner D, Brankovic C, Chang Y, Kalnay E, Marx L, Palmer T, Paolino D, Ploshay L, Schubert S, Straus D, Suarez M, Tribbia J (2000) Dynamical seasonal prediction. Bull Amer Meteor Soc 81(11):2593–2606

Smith RL, Blomley JE, Meyers G (1991) A univariate statistical interpolation scheme for subsurface thermal analyses in the tropical oceans. Prog Oceanogr 28:219–256

Trosnikov IV, Kaznacheeva VD, Kiktev DB, Tolstikh MA (2005) Assessment of potential predictability of meteorological variables in dynamical seasonal modeling of atmospheric circulation on the basis of semi-Lagrangian model SL-AV. Russian Meteorol Hydrol 12

Wang G, Alves O, Hudson D, Hendon H, Liu G, Tseitkin F (2008) SST skill assessment from the new POAMA-1.5 system. BMRC Res Lett 8:2–6

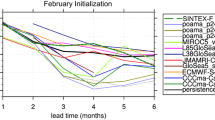

Wang B, Lee J-Y, Kang I-S, Shukla J, Park C-K, Kumar A, Schemm J, Cocke S, Kug J-S, Luo J-J, Zhou T, Wang B, Fu X, Yun W-T, Alves O, Jun EK, Kinter J, Kirtman B, Krishnamurti T, Lau NC, Lau W, Liu P, Peigon P, Rosati T, Schubert S, Stern W, Suarez M, Yamagata T (2009) Advance and prospectus of seasonal prediction: assessment of the APCC/CliPAS 14-model ensemble retrospective seasonal prediction (1980–2004). Clim Dyn 33:93–117

Weng S-P, Tung Y-C, Huang W-H (2005) Predictions of global sea surface temperature anomalies: introduction of CWB/OPGSST1.1 Forecast System. Proceedings, Conference on Weather Analysis and Forecasting, Taipei, Taiwan, pp 341–345

Winsberg MD (2003) Florida weather. University Press of Florida. p 218

Xie P, Arkin PA (1996) Global precipitation: a 17-year monthly analysis based on gauge observations, satellite estimates, and numerical model outputs. Bull Amer Meteor Soc 78:2539–2558

Acknowledgments

We thank the various model providers for making available, through APEC Climate Center (APCC), the hindcast datasets used in this study. We thank Ms. Kyong Hee An for facilitating the data access and for providing associated documentation and Ms. Kathy Fearon for her careful reading of the manuscript and helpful editorial comments. All model data used in this study are available online from APCC (http://www.apcc21.net). This research was supported by NOAA grant NA07OAR4310221 and USDA grant 2088-38890-19013.

Appendices

Appendix 1: Potential predictability

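Following Kumar and Hoerling (1995), consider an ensemble of forecasts for any forecast variable A. Let there be i = 1, …, N ensemble members and α = 1, …, M years of external forcing, and let \( {A_{\alpha i}} \) denote the forecast of member i for year α. The ensemble mean forecast, or the most likely outcome, for a given year α is then

$$ \overline{A}_{\alpha} = \frac{1}{N}\sum\limits_{i = 1}^{N} A_{\alpha i}. $$

The internal variance, or spread, of the ensemble members around this mean is

$$ \sigma_{\alpha}^{2} = \frac{1}{N - 1}\sum\limits_{i = 1}^{N} \left( A_{\alpha i} - \overline{A}_{\alpha} \right)^{2}. $$

Since the spread can depend on the particular choice of year, the internal variance is then \( {\sigma_{\alpha }^{2} } \) averaged over all possible α, or

$$ \sigma_{I}^{2} = \frac{1}{M}\sum\limits_{\alpha = 1}^{M} \sigma_{\alpha}^{2}. $$

The external variance is an estimate of the degree to which the difference between the ensemble mean forecasts for different years is due to the boundary conditions rather than to “chance”; thus it is a measure of the forecast’s ability to distinguish between different regimes associated with different boundary conditions. The overall mean forecast, averaged over all realizations and boundary conditions, is given by

$$ \overline{\overline{A}} = \frac{1}{MN}\sum\limits_{\alpha = 1}^{M} \sum\limits_{i = 1}^{N} A_{\alpha i}; $$

then the external variance is given by

$$ \sigma_{E}^{2} = \frac{1}{M - 1}\sum\limits_{\alpha = 1}^{M} \left( \overline{A}_{\alpha} - \overline{\overline{A}} \right)^{2}. $$

The total variance of the system is then

$$ \sigma_{T}^{2} = \sigma_{E}^{2} + \sigma_{I}^{2}. $$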

By estimating the ratio of \( {\sigma_{E}^{ 2} } \) to \( {\sigma_{T}^{ 2} } \) or \( {\sigma_{I}^{ 2} } \) we can then judge what part of the observed signal is due to boundary conditions and what part is due to the uncertainty of initial conditions, i.e., is effectively noise. The larger the ratio, the higher the predictability inherent to the system. The ratio \( {\sigma_{E}^{ 2} /\sigma_{T}^{ 2} } \) ranges between zero and one. In the case of zero, the ensemble does not see the boundary conditions, i.e., the outcome is entirely due to noise. In the case of one, the boundary conditions overwhelmingly mask out the effect of uncertainty of initial conditions.
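As an illustration of the variance decomposition described above, the following is a minimal sketch in plain Python. The function name `potential_predictability` and the nested-list layout `A[alpha][i]` are hypothetical, and the N − 1 and M − 1 normalizations are an assumption rather than something stated in the text of this appendix.

```python
from statistics import mean


def potential_predictability(A):
    """Decompose ensemble variance into external and internal parts.

    A[alpha][i] is the forecast for year alpha (M years) by ensemble
    member i (N members). The N - 1 and M - 1 normalizations are assumed.
    """
    M = len(A)
    N = len(A[0])

    # Ensemble mean forecast for each year
    ens_mean = [mean(year) for year in A]

    # Internal variance (spread around the ensemble mean) for each year
    sigma_alpha2 = [
        sum((a - m) ** 2 for a in year) / (N - 1)
        for year, m in zip(A, ens_mean)
    ]
    # Internal variance averaged over all years
    sigma_I2 = mean(sigma_alpha2)

    # Overall mean; with equal N per year this equals the mean over all members
    grand_mean = mean(ens_mean)
    # External variance of the ensemble means around the overall mean
    sigma_E2 = sum((m - grand_mean) ** 2 for m in ens_mean) / (M - 1)

    sigma_T2 = sigma_E2 + sigma_I2
    return {
        "external": sigma_E2,
        "internal": sigma_I2,
        "total": sigma_T2,
        "ratio": sigma_E2 / sigma_T2,  # undefined if the total variance is zero
    }


# Three years, two members each: the years differ far more than the members do
result = potential_predictability([[1.0, 3.0], [5.0, 7.0], [9.0, 11.0]])
print(result["ratio"])
```

In this toy ensemble the year-to-year differences dominate the member-to-member spread, so the external-to-total ratio is close to one.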

The distance between the centroids of distributions for two different boundary condition regimes is a representation of the system’s external variability. The sharpness of the distribution associated with a particular regime is representative of the internal variance and represents the range of possible outcomes associated with the particular boundary conditions. If the distributions are well separated, either because they are narrow or because their center points are far apart, the two states can be easily distinguished. If the reason for the distinguishability is that the curves are narrow, it can be said that the predictability is due to the internal variability being low. If what makes the distinction possible is that the two states are far apart, then the predictability is attributable to the large external variance.

Appendix 2: Brier skill score and reliability diagram

A probabilistic forecast is one that estimates the probability of occurrence of a chosen event ℰ, such as a precipitation rate anomaly relative to the mean state exceeding a preselected threshold level. For an ensemble of equally reliable models the probability P of the event ℰ is \( {(m/M) \times 100}, \) where m is the number of ensemble members forecasting ℰ, and M is the total number of ensemble forecasts. Since for a single realization a probability forecast is neither correct nor wrong, probability forecasts are verified by analyzing the joint (statistical) distribution of forecasts and observations.
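The ensemble-based probability described above can be sketched as follows. This is only an illustration: the function name `event_probability` is hypothetical, and the event is assumed to be "the anomaly exceeds a threshold", with all members weighted equally.

```python
def event_probability(members, threshold):
    """Percent of ensemble members that forecast the event.

    The event is assumed here to be an anomaly strictly above `threshold`.
    """
    m = sum(1 for x in members if x > threshold)  # members forecasting the event
    M = len(members)                              # total number of ensemble forecasts
    return 100.0 * m / M


# Four hypothetical precipitation anomalies, threshold 0.3
print(event_probability([0.2, -0.1, 0.5, 0.7], 0.3))
```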

The Brier score measures the magnitude of the probability forecast errors. It is defined as

$$ BS = \frac{1}{n}\sum\limits_{k = 1}^{n} \left( f(k) - o(k) \right)^{2}, $$

where the index k refers to the forecast/observation pairs, and n is the total number of such pairs within the data set, and both the forecast (f) and the observations (o) are in terms of probabilities. The lowest possible value of the Brier score is zero, and it can only be achieved with a perfect deterministic forecast.
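A minimal sketch of the Brier score as the mean squared difference between forecast probabilities and observed outcomes is given below. It assumes probabilities are expressed as fractions between 0 and 1 (not percent) and that each observation is 1 if the event occurred and 0 otherwise; the function name `brier_score` is hypothetical.

```python
def brier_score(f, o):
    """Mean squared error of probability forecasts f against outcomes o."""
    if len(f) != len(o):
        raise ValueError("forecasts and observations must have equal length")
    n = len(f)
    return sum((fk - ok) ** 2 for fk, ok in zip(f, o)) / n


# Two perfect deterministic forecasts and two hedged 50% forecasts
print(brier_score([1.0, 0.0, 0.5, 0.5], [1, 0, 1, 0]))
```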

Let the probabilistic forecast for ℰ be done within I discrete categories \( {y_{i} } \). The frequency with which forecasts of \( {y_{i} } \) are issued is \( {p(y_{i} )} \). The frequency within a category \( {y_{i} } \) forecast with which the event ℰ actually occurs is the conditional frequency \( {\overline{{o_{i} }} = p\left( {o(k) = 1|y_{i} } \right)} \). A reliability diagram is a plot of \( {\overline{{o_{i} }} } \) versus \( {y_{i} } \), accompanied by the forecast frequency distribution \( {p(y_{i} )} \) versus \( {y_{i} } \). For a perfect forecast, the reliability diagram would be a line at 45°.
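The quantities plotted in a reliability diagram can be tabulated as in the sketch below. It assumes the forecasts already take a small set of discrete values (as fractions between 0 and 1) and that observations are coded 1 or 0; the function name `reliability_table` is hypothetical.

```python
from collections import defaultdict


def reliability_table(f, o):
    """Return a sorted list of (y_i, p(y_i), conditional frequency) tuples."""
    counts = defaultdict(int)  # number of times each category y_i was forecast
    hits = defaultdict(int)    # number of those times the event occurred
    for fk, ok in zip(f, o):
        counts[fk] += 1
        hits[fk] += ok
    n = len(f)
    return [(y, counts[y] / n, hits[y] / counts[y]) for y in sorted(counts)]


f = [0.0, 0.0, 0.5, 0.5, 0.5, 0.5, 1.0, 1.0]
o = [0, 0, 1, 0, 1, 0, 1, 1]
for y, p_y, o_bar in reliability_table(f, o):
    print(y, p_y, o_bar)
```

In this toy sample the conditional frequency equals the forecast probability in every category, so the points fall on the 45° line.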

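As suggested by Murphy (1973), it is useful to decompose the Brier score into three terms: reliability, resolution, and uncertainty:

$$ BS = \sum\limits_{i = 1}^{I} p(y_{i} )\left( y_{i} - \overline{{o_{i} }} \right)^{2} - \sum\limits_{i = 1}^{I} p(y_{i} )\left( \overline{{o_{i} }} - \overline{o} \right)^{2} + \overline{o} \left( 1 - \overline{o} \right), $$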

where \( \overline{o} = \frac{1}{n}\sum\nolimits_{k = 1}^{n} {o(k)} \) is the unconditional mean frequency of occurrence of the event ℰ.

The reliability term evaluates the statistical accuracy of the forecast: a perfectly reliable forecast is one for which the observed conditional frequency \( {\overline{{o_{i} }} } \) is equal to the forecast probability (i.e., over all forecasts of a y percent chance of ℰ, ℰ occurs y percent of the time). The resolution term addresses the distance between the observed conditional frequencies and the unconditional climatological frequency. Forecasts that are always close to the climatological frequency exhibit good reliability (since the forecast frequency matches the observed frequency) but poor resolution (since they are not able to distinguish between different regimes). The uncertainty term is a measure of the variability of the system and is not influenced by the forecast. The Brier skill score is calculated with respect to a reference forecast as

For a perfect forecast system, \( {BS = BS_{\text{rel}} = BS_{\text{res}} = 1} \), while for a climatological forecast \( {BS = BS_{\text{rel}} = BS_{\text{res}} = 0} \).
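The decomposition and the skill score can be sketched together as below. This is an illustration under stated assumptions, not the authors' implementation: forecasts take discrete values between 0 and 1, observations are coded 1 or 0, the reference forecast is taken to be climatology (whose Brier score equals the uncertainty term), and the skill score uses the common form 1 − BS/BS_ref. The function names are hypothetical.

```python
from collections import defaultdict


def murphy_decomposition(f, o):
    """Return (reliability, resolution, uncertainty) of the Brier score."""
    n = len(f)
    counts = defaultdict(int)  # forecasts issued per category y_i
    hits = defaultdict(int)    # event occurrences per category y_i
    for fk, ok in zip(f, o):
        counts[fk] += 1
        hits[fk] += ok
    o_bar = sum(o) / n  # unconditional frequency of the event
    rel = sum(c * (y - hits[y] / c) ** 2 for y, c in counts.items()) / n
    res = sum(c * (hits[y] / c - o_bar) ** 2 for y, c in counts.items()) / n
    unc = o_bar * (1.0 - o_bar)
    return rel, res, unc


def brier_skill_score(f, o):
    """Skill relative to a climatological reference (assumed here).

    Undefined when the event always or never occurs (zero uncertainty).
    """
    rel, res, unc = murphy_decomposition(f, o)
    bs = rel - res + unc  # Brier score rebuilt from its three terms
    return 1.0 - bs / unc


f = [0.0, 0.0, 0.5, 0.5, 0.5, 0.5, 1.0, 1.0]
o = [0, 0, 1, 0, 1, 0, 1, 1]
print(murphy_decomposition(f, o), brier_skill_score(f, o))
```

Under these assumptions a perfect forecast scores 1 and a forecast that always issues the climatological frequency scores 0.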

Cite this article

Stefanova, L., Misra, V., O’Brien, J.J. et al. Hindcast skill and predictability for precipitation and two-meter air temperature anomalies in global circulation models over the Southeast United States. Clim Dyn 38, 161–173 (2012). https://doi.org/10.1007/s00382-010-0988-7

DOI: https://doi.org/10.1007/s00382-010-0988-7